S3 - Simple Storage Service

Docs - https://docs.aws.amazon.com/s3/

Console - https://console.aws.amazon.com/s3/home or https://s3.console.aws.amazon.com/s3/home

Quotas - https://docs.aws.amazon.com/general/latest/gr/s3.html

Public S3 buckets - https://buckets.grayhatwarfare.com

Alternative CLI - https://github.com/peak/s5cmd

From How an empty S3 bucket can make your AWS bill explode:

When executing a lot of requests to S3, make sure to explicitly specify the AWS region. This way you will avoid additional costs of S3 API redirects.
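As a rough illustration of pinning the region, the sketch below builds the regional virtual-hosted-style endpoint for a bucket so that requests go straight to the right region. The function name is hypothetical, and it assumes the standard `aws` partition (endpoints ending in `amazonaws.com`).

```python
def regional_endpoint(bucket: str, region: str) -> str:
    """Return the virtual-hosted-style endpoint for a bucket in a known region."""
    if not region:
        # Refuse to guess: an unspecified region is what leads to redirects.
        raise ValueError("region must be set explicitly")
    return f"https://{bucket}.s3.{region}.amazonaws.com"


print(regional_endpoint("my-bucket", "eu-west-1"))
# https://my-bucket.s3.eu-west-1.amazonaws.com
```

With the AWS CLI the same idea is expressed with the `--region` option, and SDK clients usually accept a region parameter when they are created.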

Data Transfer from Amazon S3 Glacier Vaults to Amazon S3 - https://aws.amazon.com/solutions/implementations/data-transfer-from-amazon-s3-glacier-vaults-to-amazon-s3/

How to find the AWS Account ID of any S3 Bucket - https://tracebit.com/blog/how-to-find-the-aws-account-id-of-any-s3-bucket

Main characteristics

Object storage, accessible over a REST API using HTTP.

It's easy to integrate into applications using SDKs.

It's a regional service, but the bucket name must be globally unique since we use it in the bucket endpoint URLs. See bucket naming rules.

To reduce latency, create the bucket in the region closest to your users.

An S3 object consists of a key (its identifier, unique within the bucket), some metadata and the data itself.

Buckets have a flat structure; there's no folder hierarchy like in a traditional file system. You can mimic folders by using prefixes in the object keys (ie the object names). For example, if an object key is photos/IMG_20160911_035952.jpg, the console shows a "photos" folder.
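To make the "folders are just prefixes" idea concrete, here is a minimal sketch that groups a flat list of keys into direct objects and folder-like prefixes, similar in spirit to listing with a delimiter. The function name and the sample keys (other than the photo key above) are made up for illustration.

```python
def list_level(keys, prefix="", delimiter="/"):
    """Split keys under `prefix` into direct objects and 'folder' prefixes."""
    objects, folders = [], set()
    for key in keys:
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        head, sep, _ = rest.partition(delimiter)
        if sep:
            # There is a delimiter after the prefix: show it as a folder.
            folders.add(prefix + head + delimiter)
        else:
            objects.append(key)
    return objects, sorted(folders)


keys = ["photos/IMG_20160911_035952.jpg", "photos/2016/a.jpg", "readme.txt"]
print(list_level(keys))             # (['readme.txt'], ['photos/'])
print(list_level(keys, "photos/"))  # (['photos/IMG_20160911_035952.jpg'], ['photos/2016/'])
```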

You cannot delete an S3 bucket containing objects. You need to empty it first, including the object versions.

Provides strong read-after-write consistency, see https://aws.amazon.com/s3/consistency and the announcement.

S3 use cases

From https://www.youtube.com/watch?v=-zc16KhOILM

- Backups (eg database backups)

- We can set rules to delete objects that are no longer needed, eg delete database backups older than 7 days

- We can have versions of the same file

- Static website hosting

- Note that "Amazon S3 website endpoints do not support HTTPS. If you want to use HTTPS, you can use Amazon CloudFront to serve a static website hosted on Amazon S3"

- Share files to logged/authorized users with presigned URLs. URLs expire

- Long term archiving

- For files that are accessed infrequently, but need to be saved for years

- There are various storage classes/tiers: Standard-Infrequent Access, Glacier...

- Compliance with regulations

- Eg comply with the GDPR by storing data in the EU, setting encryption etc.

- FTP server

- Application cache

- Upload files

- Since we are not uploading to our server, we don't need to worry about CPU, bandwidth or availability

- Query data and perform analytics with Athena, which lets you query data in S3 using SQL

- S3 triggers/events, eg to invoke a Lambda function

- Partial data access with S3 Select, which lets you retrieve a subset of a file instead of the whole file
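The "delete database backups older than 7 days" rule from the backups use case can be sketched as a lifecycle configuration. This is only an illustration: the `backups/` prefix, the rule ID and the function name are assumptions, not something the notes prescribe.

```python
import json


def expiration_rule(prefix: str, days: int, rule_id: str = "expire-old-backups") -> dict:
    """Build a lifecycle configuration that expires objects under `prefix`."""
    return {
        "Rules": [
            {
                "ID": rule_id,
                "Filter": {"Prefix": prefix},  # only objects under this prefix
                "Status": "Enabled",
                "Expiration": {"Days": days},  # days since object creation
            }
        ]
    }


config = expiration_rule("backups/", 7)
print(json.dumps(config, indent=2))
```

The printed JSON could be saved to a file and applied with `aws s3api put-bucket-lifecycle-configuration --bucket <bucket-name> --lifecycle-configuration file://lifecycle.json`.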

Security

From How to avoid S3 data leaks? - https://cloudonaut.io/s3-security-best-practice

- Use IAM policies to control access to your data stored in S3. Avoid using bucket policies whenever possible. Do not even think about using a bucket or object ACLs.

- Enable Block Public Access. If possible, do so for your AWS account to protect all buckets. If not, make sure to enable Block Public Access for all existing and future S3 buckets containing private data.

- Do not mix private and public data within an S3 bucket. Also make sure, the bucket name includes the data classification.

- Enable email notifications from Trusted Advisor to get notified of unintended changes to your bucket policies and bucket ACLs. Especially, watch out for the Amazon S3 Bucket Permissions check.

Avoid bucket policies if possible and never use ACLs

From How to avoid S3 data leaks? - https://cloudonaut.io/s3-security-best-practice/#Rule-2-Enable-Block-Public-Access

- Use an IAM policy to grant access to your S3 bucket whenever the caller can authenticate as an IAM principal (user or role).

- Use a bucket policy only in exceptional cases when using an IAM policy is not an option (e.g., to allow read access from the whole organization, …).

- Never use bucket or object ACLs.

IAM Policies and Bucket Policies and ACLs! Oh, My! (Controlling Access to S3 Resources) - https://aws.amazon.com/blogs/security/iam-policies-and-bucket-policies-and-acls-oh-my-controlling-access-to-s3-resources/

- If you’re more interested in “What can this user do in AWS?”, then IAM policies are probably the way to go. You can answer this question by looking up an IAM user and then examining their IAM policies to see what rights they have.

- If you’re more interested in “Who can access this S3 bucket?”, then S3 bucket policies will likely suit you better. You can answer this question by looking up a bucket and examining the bucket policy.

A majority of modern use cases in Amazon S3 no longer require the use of ACLs. We recommend that you keep ACLs disabled, except in unusual circumstances where you need to control access for each object individually.

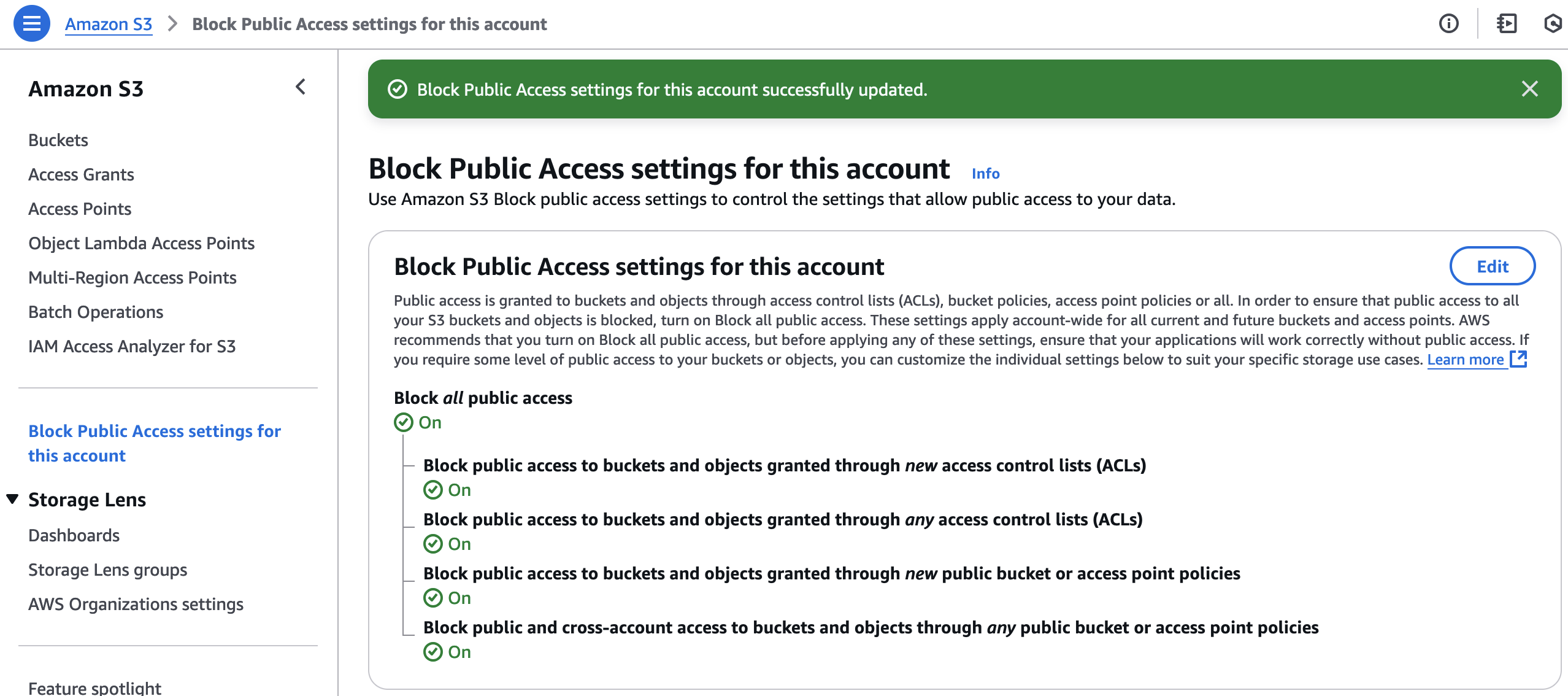

Block all public access for all buckets in the account

Documentation: Blocking public access to your Amazon S3 storage.

At the S3 dashboard, at the "Block Public Access settings for this account" page, enable "Block all public access" to disable public access for all S3 buckets in the account:

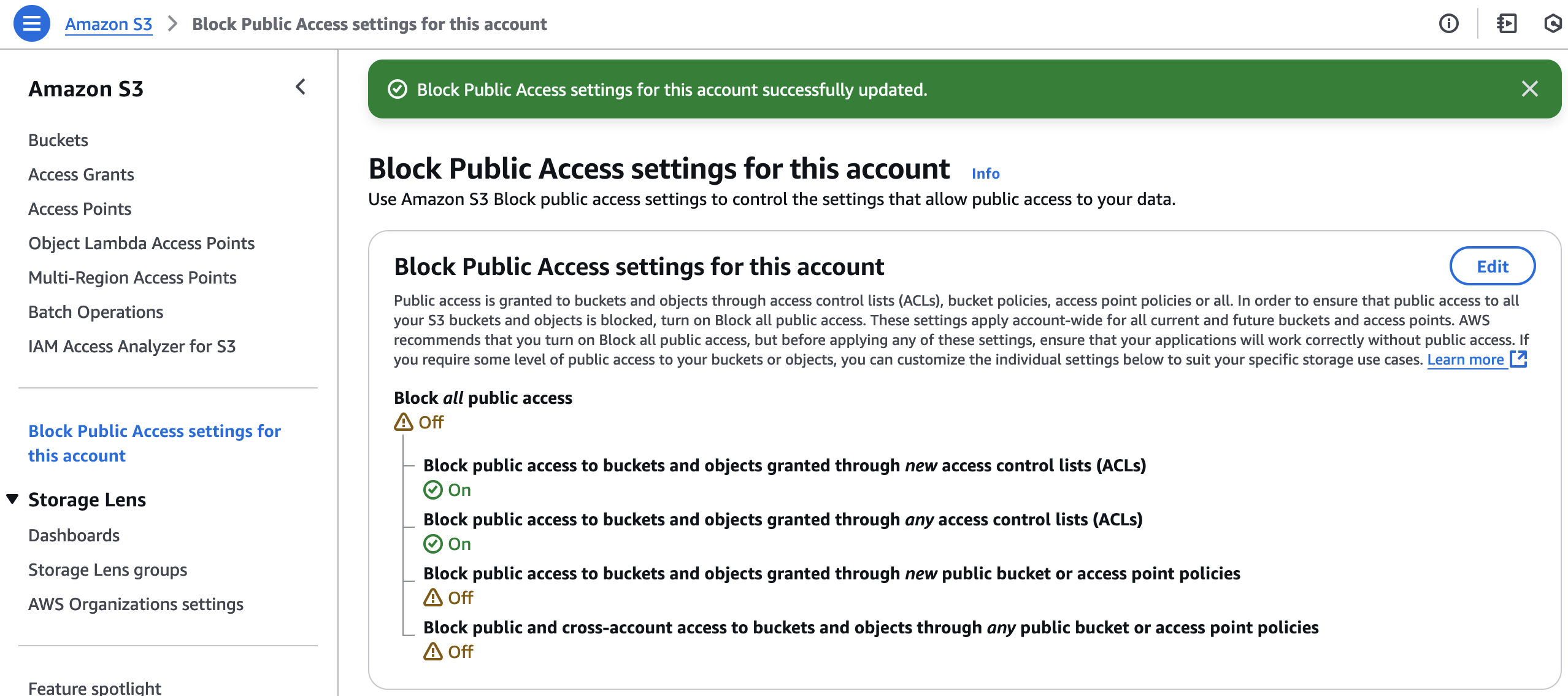

In case some of your buckets need to have public access, you can still block ACLs at the account level:

Can be done with Terraform (docs):

resource "aws_s3_account_public_access_block" "example" {
  block_public_acls       = true
  block_public_policy     = true
  ignore_public_acls      = true
  restrict_public_buckets = true
}

Versioning

Versioning status can be either enabled, suspended, or disabled (see aws_s3_bucket_versioning). Once versioning is enabled, it cannot be disabled, only suspended. From https://docs.aws.amazon.com/AmazonS3/latest/userguide/Versioning.html#versioning-states:

After you version-enable a bucket, it can never return to an unversioned state. But you can suspend versioning on that bucket.

Prevent accidental deletion of objects

Enable versioning

With versioning enabled you can retrieve and restore every version of an object, for example if you deleted or overwrote it accidentally.

How can I retrieve an Amazon S3 object that was deleted in a versioning-enabled bucket? - https://repost.aws/knowledge-center/s3-configuration-undelete

Enable MFA delete

https://docs.aws.amazon.com/AmazonS3/latest/userguide/MultiFactorAuthenticationDelete.html

https://repost.aws/knowledge-center/s3-bucket-mfa-delete

https://medium.com/@kiran_c/aws-dva-10-s3-security-e2d142670a3b

MFA delete requires versioning to be enabled. You can't enable MFA delete using the console, you need to use the CLI (see commands below), an SDK or the REST API. MFA delete can only be enabled by the root user (the bucket owner).

When enabled, only the root user will be able to permanently delete objects or change the versioning state of the bucket. To permanently delete an object version, you must include the x-amz-mfa request header.

Compatible services

- https://www.cloudflare.com/products/r2/

- https://wasabi.com/s3-compatible-cloud-storage/

- https://www.backblaze.com/b2/docs/s3_compatible_api.html

CLI - s3api

Commands:

- v2: https://docs.aws.amazon.com/cli/latest/reference/s3api/index.html

- v1: https://docs.aws.amazon.com/cli/latest/reference/s3api/index.html#available-commands

Examples - https://github.com/aws/aws-cli/tree/develop/awscli/examples/s3api

aws s3api create-bucket --bucket <bucket-name> --acl <acl> --region <region>

aws s3api create-bucket --bucket my-bucket --region us-east-1

Response

{
    "Location": "/my-bucket"
}

Delete bucket (must be empty)

aws s3api delete-bucket --bucket <bucket-name>

aws s3api put-object --bucket <bucket-name> --key file.txt --body file.txt

aws s3api delete-object --bucket <bucket-name> --key <key>

Enable bucket versioning (docs):

aws s3api put-bucket-versioning --bucket <bucket-name> \
  --versioning-configuration Status=Enabled

We can also enable MFA delete in the bucket versioning configuration:

aws s3api put-bucket-versioning --bucket <bucket-name> \
  --versioning-configuration Status=Enabled,MFADelete=Enabled \
  --mfa "arn:aws:iam::<account-id>:mfa/<device> <otp-code>"

List object versions (docs):

aws s3api list-object-versions --bucket <bucket-name>

Archived objects

Restore an archived object from (eg) Glacier (docs):

aws s3api restore-object --bucket <bucket-name> \
  --key myfile.pdf \
  --restore-request '{"Days": 1, "GlacierJobParameters": {"Tier": "Expedited"}}'

You'll need to wait before downloading it. To check if the file can be downloaded check the metadata:

aws s3api head-object --bucket <bucket-name> --key myfile.pdf

If Restore is ongoing-request="true", the object restoration is still in progress. If it's "false", the restoration is done and you can download the object with aws s3 cp.
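If you want to script this check, a small parser for the Restore value could look like the sketch below. It assumes the value has the `ongoing-request="...", expiry-date="..."` shape described above; the function name and the returned dict layout are invented for this example.

```python
import re


def parse_restore(restore):
    """Parse the Restore value returned by head-object into a small dict."""
    if not restore:
        # No Restore field at all: no restore has been requested.
        return {"requested": False, "ready": False, "expiry": None}
    fields = dict(re.findall(r'([\w-]+)="([^"]*)"', restore))
    ongoing = fields.get("ongoing-request") == "true"
    return {
        "requested": True,
        "ready": not ongoing,
        "expiry": fields.get("expiry-date"),
    }


print(parse_restore('ongoing-request="true"'))
# {'requested': True, 'ready': False, 'expiry': None}
```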

Static website hosting

(See AWS in Action chapter 7.)

To host a static website using S3, first create a bucket:

aws s3 mb s3://my-bucket

Then upload the index.html file:

aws s3 cp index.html s3://my-bucket

Create a bucket policy JSON file:

{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Sid": "PublicRead",
      "Effect": "Allow",
      "Principal": "*",
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::my-bucket/*"
    }
  ]
}

Don't forget to replace my-bucket. Then set the bucket policy:

aws s3api put-bucket-policy --bucket my-bucket --policy file://bucketpolicy.json
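To avoid forgetting to replace the bucket name by hand, the policy file can be generated. This is a minimal sketch that reproduces the public-read policy above for a given bucket; the function name is hypothetical.

```python
import json


def public_read_policy(bucket: str) -> str:
    """Return the public-read bucket policy for `bucket` as a JSON string."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicRead",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # Applies to every object in the bucket, not the bucket itself.
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }
    return json.dumps(policy, indent=2)


print(public_read_policy("my-bucket"))
```

Redirecting the output to bucketpolicy.json gives the file expected by the put-bucket-policy command.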

When running this command with the "Block public access" enabled (the default) you get the error An error occurred (AccessDenied) when calling the PutBucketPolicy operation: User: arn:aws:iam::account-id:user/Administrator is not authorized to perform: s3:PutBucketPolicy on resource: "arn:aws:s3:::bucket-name" because public policies are blocked by the BlockPublicPolicy block public access setting.. To successfully run the command, uncheck the last two checkboxes in "Block public access" at the web console: "Block public access to buckets and objects granted through new public bucket or access point policies" and "Block public and cross-account access to buckets and objects through any public bucket or access point policies". The two other checkboxes about "ACLs" can be left checked. Note that doing this is also required to access the HTML files with the browser, otherwise you get a 403 Forbidden error. See Setting permissions for website access.

Finally enable "Static website hosting" and set the index document:

aws s3 website s3://my-bucket --index-document index.html

The website URL depends on the region, see Amazon S3 website endpoints. In us-east-1 it looks like http://my-bucket.s3-website-us-east-1.amazonaws.com.

CLI - s3

Reference:

- v2: https://docs.aws.amazon.com/cli/latest/reference/s3/index.html

- v1: https://docs.aws.amazon.com/cli/latest/reference/s3/index.html

Examples - https://github.com/aws/aws-cli/tree/develop/awscli/examples/s3

Create bucket (docs):

aws s3 mb s3://my-bucket-name

Delete bucket (docs):

aws s3 rb s3://my-bucket --force

Note that the --force option only deletes the non-versioned objects in the bucket before the bucket is deleted. Thus, if there are versioned objects, the delete will fail.
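One way to empty a versioned bucket first is to turn the output of `aws s3api list-object-versions` into payloads for `aws s3api delete-objects`. The sketch below only builds those payloads (it does not call AWS). It assumes the listing is the parsed JSON output with `Versions` and `DeleteMarkers` lists; the function name is made up.

```python
def delete_batches(listing: dict, batch_size: int = 1000):
    """Yield delete-objects payloads covering every version and delete marker."""
    entries = (listing.get("Versions") or []) + (listing.get("DeleteMarkers") or [])
    objects = [{"Key": e["Key"], "VersionId": e["VersionId"]} for e in entries]
    # delete-objects accepts at most 1000 keys per request, hence the batching.
    for start in range(0, len(objects), batch_size):
        yield {"Objects": objects[start:start + batch_size], "Quiet": True}


listing = {
    "Versions": [
        {"Key": "a.txt", "VersionId": "v1"},
        {"Key": "a.txt", "VersionId": "v2"},
    ],
    "DeleteMarkers": [{"Key": "b.txt", "VersionId": "v3"}],
}
for payload in delete_batches(listing, batch_size=2):
    print(payload)
```

Each payload can be saved to a file and passed to `aws s3api delete-objects --bucket <bucket-name> --delete file://batch.json`. Once all versions and delete markers are gone, the bucket can be deleted.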

List buckets (docs):

aws s3 ls

List files in bucket (docs):

aws s3 ls s3://my-bucket

Delete all files in bucket (docs):

aws s3 rm --recursive s3://my-bucket/

Upload single file to bucket (docs):

aws s3 cp file.txt s3://my-bucket

aws s3 cp file.txt s3://my-bucket/another-file-name.txt

aws s3 cp file.txt s3://my-bucket --storage-class GLACIER

Conditional writes: https://aws.amazon.com/about-aws/whats-new/2024/08/amazon-s3-conditional-writes

Upload multiple files (a directory) to bucket (docs):

aws s3 sync <folder> s3://my-bucket [--profile <profile>] [--delete]

Note that you can upload to a subfolder:

aws s3 sync . s3://my-bucket/images

Download file (docs):

aws s3 cp s3://my-bucket/code/lambda_function.py ~/Downloads

Download folder (docs):

aws s3 cp --recursive s3://my-bucket/images ~/Downloads/images

Performance

Best practices design patterns: optimizing Amazon S3 performance - https://docs.aws.amazon.com/AmazonS3/latest/userguide/optimizing-performance.html

You can use prefixes to improve performance, see https://aws.amazon.com/about-aws/whats-new/2018/07/amazon-s3-announces-increased-request-rate-performance/. Each S3 prefix can support at least 3,500 requests per second to add data and 5,500 requests per second to retrieve data. Also see AWS in Action p. 225.

Performance scales per prefix, so you can use as many prefixes as you need in parallel to achieve the required throughput. There are no limits to the number of prefixes.

Add a random prefix to the key names - https://stackoverflow.com/questions/43035449/add-a-random-prefix-to-the-key-names-to-improve-s3-performance
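As a back-of-the-envelope sketch of the per-prefix scaling, the code below estimates how many prefixes a target request rate needs and spreads keys over them. Instead of a truly random prefix it uses a hash of the key, so the prefix can be recomputed when reading; that substitution, the function names and the two-digit shard format are assumptions made for this example.

```python
import hashlib

PUT_RATE_PER_PREFIX = 3500  # requests/s per prefix to add data (figure quoted above)
GET_RATE_PER_PREFIX = 5500  # requests/s per prefix to retrieve data (figure quoted above)


def prefixes_needed(target_rps: int, per_prefix_rps: int) -> int:
    """Smallest number of prefixes whose combined rate covers target_rps."""
    return max(1, -(-target_rps // per_prefix_rps))  # integer ceiling division


def spread_key(key: str, n_prefixes: int) -> str:
    """Prepend a deterministic shard prefix derived from a hash of the key."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    shard = int(digest, 16) % n_prefixes
    return f"{shard:02d}/{key}"


n = prefixes_needed(55000, GET_RATE_PER_PREFIX)
print(n)  # 10
print(spread_key("photos/IMG_20160911_035952.jpg", n))
```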

Encryption

https://docs.aws.amazon.com/AmazonS3/latest/userguide/UsingEncryption.html

Server-side encryption:

- SSE-C: you (the Customer) manage the key. You need to provide the key to decrypt an object.

- SSE-S3: the default. See Amazon S3 now automatically encrypts all new objects.

- SSE-KMS: use AWS KMS keys.

- DSSE-KMS: dual-layer server-side encryption with AWS KMS keys.

Client-side encryption:

- CSE - Customer: you encrypt client-side.

Policies to enforce encryption: https://aws.amazon.com/blogs/security/how-to-prevent-uploads-of-unencrypted-objects-to-amazon-s3

VPC endpoints

To access S3, instances in public subnets can use the public S3 endpoints. For instances in private subnets, we could use a NAT Gateway, but there's a better way that doesn't use the public internet: VPC endpoints. Instances use private IPs to access S3.

There are two types of VPC endpoints: gateway endpoints and interface endpoints. See comparison at Types of VPC endpoints for Amazon S3.

- Gateway endpoints for Amazon S3. Free.

- Interface endpoints for Amazon S3. Not free. Uses AWS PrivateLink.

S3 Event Notifications

Docs: https://docs.aws.amazon.com/AmazonS3/latest/userguide/EventNotifications.html

Announcement: https://aws.amazon.com/blogs/aws/s3-event-notification

Destinations:

- SNS topics

- SQS queues

- Lambda

- EventBridge

You can only configure one notification per event type (create, delete...) per bucket. If you need multiple event notifications for the same event type, use SNS to fanout a message to multiple SNS endpoints (Lambda functions, SQS queues...). See AWS S3 Event Notification: Same Event, Different Destination and Fanout S3 Event Notifications to Multiple Endpoints.

At the S3 console, you can create an event notification at the Properties tab → Event notifications → Create event notification. If the event notification triggers a Lambda function, you can also create it at the Lambda function console: at the "Function overview" (the diagram at the top) click "+ Add trigger".
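For a Lambda destination, the handler mostly needs to pull the bucket and key out of the event. The sketch below handles both a direct S3 event and one fanned out through SNS, where the S3 event arrives as a JSON string in the SNS message. The function names are hypothetical, and the code assumes the usual `Records` layout of S3 and SNS events.

```python
import json
from urllib.parse import unquote_plus


def uploaded_objects(event: dict):
    """Return (bucket, key) pairs from an S3 event, direct or wrapped in SNS."""
    pairs = []
    for record in event.get("Records", []):
        if "Sns" in record:
            # SNS delivers the original S3 event as a JSON string in Message.
            pairs.extend(uploaded_objects(json.loads(record["Sns"]["Message"])))
        elif "s3" in record:
            bucket = record["s3"]["bucket"]["name"]
            # Object keys are URL-encoded in the event (spaces become '+').
            key = unquote_plus(record["s3"]["object"]["key"])
            pairs.append((bucket, key))
    return pairs


def handler(event, context):
    """Lambda entry point: log every object mentioned in the event."""
    for bucket, key in uploaded_objects(event):
        print(f"object event: s3://{bucket}/{key}")
```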

SNS - Send an email when an object is uploaded to an S3 bucket

Create the SNS topic

Go to the SNS console → Topics and click 'Create topic'. Then set:

- Type: Standard

- Name: Send-Email-On-S3-Upload

- Display name: this will be the email name (e.g. Something <no-reply@sns.amazonaws.com>)

- Click 'Create topic'

Create the SNS subscription

Go to the SNS console → Subscriptions and click 'Create subscription'. Then set:

- Topic ARN: arn:aws:sns:us-east-1:<account-id>:Send-Email-On-S3-Upload

- Protocol: Email

- Endpoint: set your email address

- Click 'Create subscription'

Go to your email client (eg Gmail) and confirm the subscription by clicking the 'Confirm subscription' link. The page that opens will say 'Subscription confirmed!'.

Configure access policy for the SNS topic

Replace the following values in the JSON policy:

- Resource: the SNS topic ARN

- aws:SourceArn: the S3 bucket ARN

- aws:SourceAccount: the account ID of the S3 bucket owner

{
  "Version": "2012-10-17",
  "Id": "example-ID",
  "Statement": [
    {
      "Sid": "SNS topic policy",
      "Effect": "Allow",
      "Principal": {
        "Service": "s3.amazonaws.com"
      },
      "Action": ["SNS:Publish"],
      "Resource": "arn:aws:sns:us-east-1:<account-id>:Send-Email-On-S3-Upload",
      "Condition": {
        "ArnLike": {
          "aws:SourceArn": "arn:aws:s3:::<bucket-name>"
        },
        "StringEquals": {
          "aws:SourceAccount": "<bucket-owner-account-id>"
        }
      }
    }
  ]
}

This policy grants the S3 service the "SNS:Publish" API action over the SNS topic Send-Email-On-S3-Upload, specifying the S3 bucket and the AWS account in the conditions. If you don't have this policy, when creating the event notification below you get an "Unknown Error" (the "API response" is "Unable to validate the following destination configurations").
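The three replacements can also be done programmatically. The following sketch fills in the same policy from parameters; the function name is hypothetical and the account ID and bucket name in the example call are placeholders.

```python
import json


def sns_topic_policy(topic_arn: str, bucket_name: str, bucket_owner_account_id: str) -> dict:
    """Build the access policy that lets S3 publish to the given SNS topic."""
    return {
        "Version": "2012-10-17",
        "Id": "example-ID",
        "Statement": [
            {
                "Sid": "SNS topic policy",
                "Effect": "Allow",
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": ["SNS:Publish"],
                "Resource": topic_arn,
                "Condition": {
                    # Only events coming from this bucket, owned by this account.
                    "ArnLike": {"aws:SourceArn": f"arn:aws:s3:::{bucket_name}"},
                    "StringEquals": {"aws:SourceAccount": bucket_owner_account_id},
                },
            }
        ],
    }


policy = sns_topic_policy(
    "arn:aws:sns:us-east-1:123456789012:Send-Email-On-S3-Upload",  # placeholder account ID
    "my-bucket",
    "123456789012",
)
print(json.dumps(policy, indent=2))
```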

Go to the SNS topic, click 'Edit', paste the policy into the 'Access policy' field and click 'Save changes'.

Create the event notification in S3

Go to the S3 console, navigate to the bucket and then go to the 'Properties' tab. At 'Event notifications', click 'Create event notification' and set:

- Event name: SendEmailOnUpload

- Event types: All object create events (s3:ObjectCreated:*)

- Destination: SNS topic

- Specify SNS topic: Choose from your SNS topics

- SNS topic: Send-Email-On-S3-Upload

- Click 'Save changes'

When you upload a file to the S3 bucket you should receive an email.

Hosting a static website with S3 and CloudFront - Single, private S3 bucket - Manually

This solution makes the S3 bucket private, and the S3 content is only available through CloudFront, as explained at Restricting access to an Amazon S3 origin.

Also, this solution does not use 'Static website hosting' because the S3 bucket is private and its content is served by CloudFront, which uses the S3 REST API to retrieve the files. See Key differences between a website endpoint and a REST API endpoint.

Note that you must use CloudFront to have HTTPS. See Hosting a static website using Amazon S3:

Amazon S3 website endpoints do not support HTTPS. If you want to use HTTPS, you can use Amazon CloudFront to serve a static website hosted on Amazon S3. For more information, see How do I use CloudFront to serve HTTPS requests for my Amazon S3 bucket? To use HTTPS with a custom domain, see Configuring a static website using a custom domain registered with Route 53.

Resources

- (2021) https://www.youtube.com/watch?v=CQ8vzm1kYkM - https://aws.plainenglish.io/how-to-deploy-react-app-with-s3-and-cloudfront-6d170172cd58 ← This is the main tutorial I followed

- Configuring a static website using a custom domain registered with Route 53 - https://docs.aws.amazon.com/AmazonS3/latest/userguide/website-hosting-custom-domain-walkthrough.html - Note that it uses "Static website hosting" on S3, making the S3 bucket public. It also creates two S3 buckets (one for the root domain and another for www). CloudFront is added later, in the next tutorial.

- Speeding up your website with Amazon CloudFront - https://docs.aws.amazon.com/AmazonS3/latest/userguide/website-hosting-cloudfront-walkthrough.html

- Using various origins with CloudFront distributions - https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/DownloadDistS3AndCustomOrigins.html#using-s3-as-origin

- (2019) How to deploy your React App with AWS S3 (HTTPS, Custom Domain, a CDN and continuous deployment) - https://medium.com/dailyjs/a-guide-to-deploying-your-react-app-with-aws-s3-including-https-a-custom-domain-a-cdn-and-58245251f081 - Sets a custom domain name with Route 53

- (2016) Deploying create-react-app to S3 and CloudFront - https://wolovim.medium.com/deploying-create-react-app-to-s3-or-cloudfront-48dae4ce0af - This is the guide recommended at the Create React App docs

Steps

TODO Here we have to explain how to create a new User with policies CloudFrontFullAccess and AmazonS3FullAccess, and then run aws configure to set the user access key and secret for the CLI. See https://medium.com/dailyjs/a-guide-to-deploying-your-react-app-with-aws-s3-including-https-a-custom-domain-a-cdn-and-58245251f081

Create an S3 bucket. Go to the S3 console and click Buckets → Create bucket. Set the bucket name and region. Everything else should be left at the default value:

- 'Object Ownership': check 'ACLs disabled (recommended)'.

- 'Block Public Access settings for this bucket': check 'Block all public access' since the user will access the content through CloudFront. Most tutorials tell you to uncheck all checkboxes (ie make all S3 content public), but it's not required.

- 'Bucket Versioning' → Disable.

- This tutorial suggests to enable it "to see any changes we made or roll back to a version before the change if we do not like it".

- 'Default encryption' → Disable.

- Advanced settings

- 'Object Lock' → Disable.

Create a CloudFront distribution. Go to the CloudFront console and click 'Create a CloudFront distribution'. (The article How do I use CloudFront to serve HTTPS requests for my Amazon S3 bucket? talks about this.)

- At 'Origin domain' select the bucket you've just created at S3. It should be something like <s3-bucket-name>.s3.<region>.amazonaws.com.

- Make the S3 bucket private by setting 'Origin access' to 'Origin access control settings (recommended)'. This "limits the S3 bucket access to only authenticated requests from CloudFront". See Restricting access to an Amazon S3 origin. Origin access control (OAC) is recommended over origin access identity (OAI).

- Click 'Create control setting'. At the dialog that opens, leave 'Sign requests (recommended)' checked and click 'Create'.

- Note that "You must update the S3 bucket policy. CloudFront will provide you with the policy statement after creating the distribution."

- Set 'Viewer protocol policy' to 'Redirect HTTP to HTTPS'.

- Set 'Default root object' to index.html.

- Click 'Create distribution'.

We need to update the S3 bucket policy. A blue banner says "Complete distribution configuration by allowing read access to CloudFront origin access control in your policy statement.". Copy the policy from the banner. It's something like:

{
  "Version": "2008-10-17",
  "Id": "PolicyForCloudFrontPrivateContent",
  "Statement": [
    {
      "Sid": "AllowCloudFrontServicePrincipal",
      "Effect": "Allow",
      "Principal": {
        "Service": "cloudfront.amazonaws.com"
      },
      "Action": "s3:GetObject",
      "Resource": "arn:aws:s3:::the-s3-bucket-name/*",
      "Condition": {
        "StringEquals": {
          "AWS:SourceArn": "arn:aws:cloudfront::564627046728:distribution/Q5H1J6OCBE3KIO"
        }
      }
    }
  ]
}

To update the bucket policy, go to the S3 bucket → Permissions and paste the policy JSON to the 'Bucket policy' textarea.

Note that there's no need to configure the bucket as static website because S3 will store the files but not serve the website (it will be served by CloudFront).

To upload files to the S3 bucket do: aws s3 sync <build-folder> s3://<s3-bucket-name> [--profile <profile>]. Files should now appear on the S3 console, at the Objects tab.

You should now be able to visit the CloudFront URL (aka the 'Distribution domain name', something like https://d1p1ex8s7fge20.cloudfront.net) with your browser and see the site live :)

Every time we update the S3 bucket content we need to invalidate the CloudFront edge caches to replace the files. Either use the command aws cloudfront create-invalidation --distribution-id <distribution-id> --paths '/*' [--profile <profile>], or do this at the console.

Fix 403 Forbidden error for SPA

If you have a SPA (eg a React app created with CRA) and you visit xyz.cloudfront.net/about and refresh, it will not work; will say 'AccessDenied' with response code 403.

To fix this, at the CloudFront distribution, set a custom error response at the Error pages tab with these values:

- HTTP error code: 403 Forbidden.

- Error caching minimum TTL: something like 60 seconds? Default is 10.

- This is "the minimum amount of time that you want CloudFront to cache error responses from your origin server" (ie S3).

- Also see Controlling how long CloudFront caches errors.

- Customize error response: Yes.

- Response page path: /index.html (must begin with /).

- HTTP Response code: 200 OK.

We may also need a similar custom error response for 404.

Then perform an invalidation to the path /*.
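For reference, the same settings can be written down as data. The sketch below builds a structure shaped like the `CustomErrorResponses` part of a CloudFront distribution config as I understand it; treat the field names as an assumption to check against the CloudFront API reference. The function name and defaults are invented for this example.

```python
def spa_error_responses(codes=(403, 404), ttl_seconds=60, page="/index.html"):
    """Map the given origin error codes to `page`, served with a 200 status."""
    if not page.startswith("/"):
        raise ValueError("response page path must begin with /")
    items = [
        {
            "ErrorCode": code,                  # error returned by the origin (S3)
            "ResponsePagePath": page,           # page served instead
            "ResponseCode": "200",              # status sent to the viewer
            "ErrorCachingMinTTL": ttl_seconds,  # how long the error is cached
        }
        for code in codes
    ]
    return {"Quantity": len(items), "Items": items}


print(spa_error_responses())
```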

See https://blog.nicera.in/2020/08/hosting-react-app-on-s3-cloudfront-with-react-router-404-fix/ for more. Also see nice comparison of different approaches to solve this at https://stackoverflow.com/a/72450228/4034572.

Custom domain

Resources:

- Speeding up your website with Amazon CloudFront - https://docs.aws.amazon.com/AmazonS3/latest/userguide/website-hosting-cloudfront-walkthrough.html

- Requirements for using alternate domain names - https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/CNAMEs.html#alternate-domain-names-requirements

To add an alternate domain name (CNAME) to a CloudFront distribution, you must attach to your distribution a trusted, valid SSL/TLS certificate that covers the alternate domain name. This ensures that only people with access to your domain’s certificate can associate with CloudFront a CNAME related to your domain.

- How to deploy your React App with AWS S3 (HTTPS, Custom Domain, a CDN and continuous deployment) - https://medium.com/dailyjs/a-guide-to-deploying-your-react-app-with-aws-s3-including-https-a-custom-domain-a-cdn-and-58245251f081

- How to Create S3 Static Website With HTTPS - https://tynick.com/blog/05-30-2019/how-to-create-s3-static-website-with-https-its-so-easy/

At the Route 53 console, purchase a domain.

At the AWS Certificate Manager console, create a certificate with the following steps:

- Important: the certificate must be in the US East (N. Virginia) Region (us-east-1). Make sure that you have the 'N. Virginia' region selected at the top navbar. See why at Supported Regions:

To use an ACM certificate with Amazon CloudFront, you must request or import the certificate in the US East (N. Virginia) region. ACM certificates in this region that are associated with a CloudFront distribution are distributed to all the geographic locations configured for that distribution.

- Start by clicking 'Request a certificate'. At the page that opens (Certificate type) select 'Request a public certificate' and click 'Next'.

- At the page that opens (Request public certificate), set:

- Domain names. There are 2 options:

- Wildcard: you can "use an asterisk (*) to request a wildcard certificate to protect several sites in the same domain. For example, *.example.com protects www.example.com, site.example.com, and images.example.com".

- If you only want some domains like example.com and www.example.com, set these domains one by one.

- At 'Select validation method' choose 'DNS validation - recommended'.

- Click 'Request'.

A blue banner appears with the message "Successfully requested certificate with ID some-id. A certificate request with a status of pending validation has been created. Further action is needed to complete the validation and approval of the certificate.".

At the ACM console → Certificates, the certificate Status is 'Pending validation'. We need to validate the ownership of the domain to complete the certificate request. To do so, at the ACM console go to the new certificate and click 'Create records in Route 53'. At the page that opens (Create DNS records in Amazon Route 53), click 'Create records'. This creates two DNS records of type CNAME for the domain at Route 53. At the Route 53 console, you will see that the 'Record count' for the domain goes from 2 to 4 after this step. Right after doing this, the domain Status at the ACM console is still 'Pending validation', but it will change to '✓ Issued' (in green) some minutes later.

Go to the CloudFront console and click the distribution. At the 'General' tab, 'Settings' pane, click 'Edit'.

At 'Alternate domain name (CNAME)', set the domains used at the ACM certificate (eg example.com and www.example.com).

At the dropdown 'Custom SSL certificate', choose the ACM certificate just created.

Click 'Save changes'.

At the distribution 'General' tab, 'Details' pane, the 'Last modified' will be 'Deploying', and some minutes later it will change to a date (eg 'October 7, 2022 at 4:53:47 PM UTC').

Go to the Route 53 console → Hosted zones and choose the zone. Click 'Create record'. Leave 'Record name' empty. Set 'Record type' to 'A'. Enable the switch 'Alias' and set 'Route traffic to' to 'Alias to CloudFront distribution'. Choose the distribution from the dropdown. At 'Routing policy' leave it to 'Simple routing'. You need to repeat this for www. Click 'Add another record' and set the same values except for 'Record name', which should be 'www'. Click 'Create records'. Wait a few minutes for changes to take effect.
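The two alias records can also be described as a Route 53 change batch. This is a sketch under assumptions: the function name is made up, `UPSERT` is chosen so the batch can be re-applied, and the hosted zone ID is the fixed value that Route 53 uses for CloudFront alias targets.

```python
import json

# Fixed hosted zone ID used for alias records that point to CloudFront.
CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"


def alias_change_batch(domain: str, distribution_domain: str, subdomains=("", "www")) -> dict:
    """Build a change batch with one alias A record per subdomain ('' = apex)."""
    changes = []
    for sub in subdomains:
        name = f"{sub}.{domain}" if sub else domain
        changes.append({
            "Action": "UPSERT",
            "ResourceRecordSet": {
                "Name": name,
                "Type": "A",
                "AliasTarget": {
                    "HostedZoneId": CLOUDFRONT_HOSTED_ZONE_ID,
                    "DNSName": distribution_domain,
                    "EvaluateTargetHealth": False,
                },
            },
        })
    return {"Changes": changes}


batch = alias_change_batch("example.com", "d1p1ex8s7fge20.cloudfront.net")
print(json.dumps(batch, indent=2))
```

Saved to a file, the batch could be applied with `aws route53 change-resource-record-sets --hosted-zone-id <zone-id> --change-batch file://records.json`.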

Redirect www to non-www

To redirect the www.example.com/* request to example.com/* follow the instructions at the CloudFront doc.

Logging

See Step 5: Configure logging for website traffic - "If you want to track the number of visitors accessing your website, you can optionally enable logging"

Hosting a static website with S3 and CloudFront - Two S3 buckets with 'Static website hosting'

- Redirecting a domain with HTTPS using Amazon S3 and CloudFront - https://simonecarletti.com/blog/2016/08/redirect-domain-https-amazon-cloudfront/ - Creates two S3 buckets.

- Cloudfront redirect www to naked domain with ssl [closed] - https://stackoverflow.com/questions/28675620/cloudfront-redirect-www-to-naked-domain-with-ssl

Hosting a static website with S3 and CloudFront - With Terraform

Resources

- https://jarombek.com/blog/feb-15-2020-s3-react

- https://www.karanpratapsingh.com/blog/deploy-react-s3-cloudfront - https://github.com/karanpratapsingh/tutorials/tree/master/react/s3-cloudfront

- Terraform module for building and deploying Next.js apps to AWS. Supports SSR (Lambda), Static (S3) and API (Lambda) pages. - https://github.com/milliHQ/terraform-aws-next-js

- https://github.com/bregman-arie/devops-exercises/blob/master/topics/aws/exercises/s3/new_bucket/terraform/main.tf

Hosting a static website with S3 and CloudFront - With CloudFormation

Resources

- Getting started with a secure static website - https://docs.aws.amazon.com/AmazonCloudFront/latest/DeveloperGuide/getting-started-secure-static-website-cloudformation-template.html - https://github.com/aws-samples/amazon-cloudfront-secure-static-site

- https://medium.com/swlh/create-deploy-a-serverless-react-app-to-s3-cloudfront-on-aws-4f83fa605ff0

Presigned URLs

https://docs.aws.amazon.com/AmazonS3/latest/userguide/using-presigned-url.html

Share objects

https://docs.aws.amazon.com/AmazonS3/latest/userguide/ShareObjectPreSignedURL.html

aws s3 presign s3://my-bucket/mydoc.txt --expires-in 86400

Returns a URL that can be used to download the file.

File uploads

Patterns for building an API to upload files to Amazon S3 - https://aws.amazon.com/blogs/compute/patterns-for-building-an-api-to-upload-files-to-amazon-s3/

Uploading to Amazon S3 directly from a web or mobile application - https://aws.amazon.com/blogs/compute/uploading-to-amazon-s3-directly-from-a-web-or-mobile-application/

https://docs.aws.amazon.com/AmazonS3/latest/userguide/PresignedUrlUploadObject.html

https://docs.aws.amazon.com/AmazonS3/latest/API/s3_example_s3_Scenario_PresignedUrl_section.html

https://sst.dev/docs/start/aws/nextjs#3-create-an-upload-form

https://sst.dev/docs/start/aws/astro#3-create-an-upload-form

S3 and CloudFront Signed URLs - https://www.s3-cloudfront-signed-urls-book.com/ - Some content is available for free at https://www.s3-cloudfront-signed-urls-book.com/serverless-example-app/

Differences between PUT and POST S3 signed URLs - https://advancedweb.hu/differences-between-put-and-post-s3-signed-urls/

Use POST URLs. While PUT URLs are simpler to use, they lack some features POST URLs provide. And since constructing a form and submitting from JavaScript is just a few lines of code, it shouldn't be a problem.

AWS S3 generate_presigned_url vs generate_presigned_post for uploading files - https://stackoverflow.com/questions/65198959/aws-s3-generate-presigned-url-vs-generate-presigned-post-for-uploading-files

Presigned URLs code examples - https://boto3.amazonaws.com/v1/documentation/api/latest/guide/s3-presigned-urls.html

PUT

s3_client.generate_presigned_url

How to use S3 PUT signed URLs - https://advancedweb.hu/how-to-use-s3-put-signed-urls/

POST

s3_client.generate_presigned_post

Accepts conditions to restrict the content type and length, for example.

How to use S3 POST signed URLs - https://advancedweb.hu/how-to-use-s3-post-signed-urls/
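To illustrate the kind of conditions a presigned POST accepts, the sketch below builds the `Fields` and `Conditions` values that restrict an upload to one content type and a maximum size. The helper name is hypothetical, and the boto3 call is only shown in a comment because it needs boto3 and AWS credentials.

```python
def post_upload_constraints(content_type: str, max_bytes: int):
    """Return (fields, conditions) limiting content type and upload size."""
    if max_bytes < 1:
        raise ValueError("max_bytes must be at least 1")
    # Fields are pre-filled form values that the client must send as-is.
    fields = {"Content-Type": content_type}
    conditions = [
        {"Content-Type": content_type},           # exact match on the form field
        ["content-length-range", 1, max_bytes],   # allowed size range in bytes
    ]
    return fields, conditions


fields, conditions = post_upload_constraints("image/png", 5 * 1024 * 1024)
print(fields)
print(conditions)

# Possible use with boto3 (not executed here):
#   s3_client.generate_presigned_post(
#       Bucket="my-bucket", Key="uploads/photo.png",
#       Fields=fields, Conditions=conditions, ExpiresIn=3600)
```

The client then submits a multipart form to the returned URL, including the returned fields plus the file itself.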