Terraform

https://developer.hashicorp.com/terraform

Forum - https://discuss.hashicorp.com/c/terraform-core/27

Copilot instructions:

- https://github.com/github/awesome-copilot/blob/main/instructions/terraform.instructions.md

- https://github.com/github/awesome-copilot/blob/main/instructions/generate-modern-terraform-code-for-azure.instructions.md

Reddit - https://www.reddit.com/r/Terraform/

https://github.com/topics/terraform

https://github.com/topics/terraform-module

Cheatsheet: https://cheat-sheets.nicwortel.nl/terraform-cheat-sheet.pdf

Terraform is a binary that translates the contents of your configuration files into API calls to cloud providers. (Page 19 of Terraform: Up and Running)

Terraform's primary function is to create, modify, and destroy infrastructure resources to match the desired state described in a Terraform configuration. source

Characteristics

- Cross platform: works on Windows, macOS and Linux.

- Multi-cloud or cloud-agnostic: supports multiple cloud providers like AWS, GCP, Azure etc.

- Declarative: you describe the infrastructure you want, and Terraform figures out how to create it. The code represents the state of your infrastructure.

- Domain Specific Language: concise and uniform code. However, it can be more difficult to do some things like for loops compared to general purpose languages like TypeScript.

- Idempotent: we can execute it multiple times and we get the same result.

- Agentless: no need to install any software on the servers we manage.

- Masterless: no need to have a master server running that stores the state and distributes updates to the managed servers.

- Immutable (for some resources): when we need to modify a resource, instead of updating it, it creates a new one.

.gitignore

https://github.com/github/gitignore/blob/main/Terraform.gitignore → Uncomment line 33 to exclude the tfplan plan file.

https://developer.hashicorp.com/terraform/language/style#gitignore

We should ignore:

- The .terraform directory, since it contains binary files we don't want to store in Git. Also, the binaries are OS-specific and they depend on the CPU architecture (see releases), so a specific version needs to be downloaded on each machine.

- State files (*.tfstate), since state contains secrets and passwords. Another reason is that if we deploy our infrastructure multiple times (dev, test, staging, prod), we need a different state file for each, so it makes sense to store them outside of the repository, decoupled from our code.

- Any *.tfvars files that contain sensitive information.

We should commit:

- .terraform.lock.hcl: the dependency lock file guarantees that the next time we run terraform init on another machine we download the same versions of the providers. The lock file tracks providers only; module versions are not recorded in it.

.terraform.lock.hcl

It is a dependency lock file that ensures that everyone uses the same provider versions across different machines and CI/CD pipelines. It serves the same purpose as package-lock.json in npm, although it tracks only providers: module versions are not recorded in it.

It contains the exact version of each provider (eg version = "2.5.1"), and the hashes to verify the integrity of the downloaded packages.

It is created automatically by init if it doesn't exist. If it exists, init uses it to install the same versions.

CLI

Don't use the official shell tab-completion (installed with terraform -install-autocomplete), because it only adds tab-completion of commands (eg apply), but not options (eg -auto-approve).

Instead, use the Oh My Zsh plugin, which autocompletes the options after typing just - + tab, showing a description of each argument too.

Note that there's also an OpenTofu plugin.

Workflow: init → plan → apply → destroy

List commands:

terraform

# or

terraform -help

Command help:

terraform init -help

init

- Doc: https://developer.hashicorp.com/terraform/cli/commands/init

- https://developer.hashicorp.com/terraform/cli/init

The first command we must always run. It initializes a working directory with these steps:

- Initializes the backend (state).

- Creates the hidden .terraform directory, which contains cached provider plugins and modules.

  - You don't commit the .terraform directory to version control. This makes sense since providers are binaries, which we don't put in version control, and they are OS-specific and architecture-specific, ie you get a different binary on an x86 vs an ARM processor.

- Downloads provider plugins and modules.

  - If the dependency lock file .terraform.lock.hcl exists, it downloads the provider versions specified there. Otherwise, it downloads the versions that match the constraints and then creates the .terraform.lock.hcl file.

- Creates the dependency lock file .terraform.lock.hcl if it doesn't exist yet. This file ensures that every person running terraform init gets the same versions of the providers, like package-lock.json does.

It is idempotent: it is safe to call it multiple times, and it has no effect if no changes are required. Thus, you can call it at any time.

Since the .terraform directory is not checked into version control, you run init when you check out a git repository, or in the CI/CD pipeline before running the other commands.

You need to run it again when you add, remove or change versions of providers or modules, and when you change the state backend.

To upgrade provider versions use terraform init -upgrade. It picks the latest version that meets the version constraints set in the code.

plan

- Doc: https://developer.hashicorp.com/terraform/cli/commands/plan

- https://developer.hashicorp.com/terraform/cli/run#planning

- https://developer.hashicorp.com/terraform/tutorials/cli/plan

Does a state refresh, that is, it checks the actual infrastructure resources using providers (which call cloud APIs), and compares them with the current configuration code, to get the difference between the current and desired state. Once it has the difference, it presents a description (plan) of the changes necessary to achieve the desired state. It does not perform any actual changes to real world infrastructure.

Actions are colored (green, red, yellow) and use a symbol:

- + Create

- - Destroy

- ~ Update

- -/+ Re-create or replace (destroy and then create)

  - Be careful: you can lose data!

  - The destroy operation can fail. For example, if we try to re-create an S3 bucket that is not empty.

You can optionally save the plan to a file with terraform plan -out=tfplan, and pass it to apply later. The file is not human-readable, but you can use the show command (terraform show tfplan) to inspect the plan.

Note that terraform plan is not just a dry-run of apply: some values are unknown until apply time (they are shown as "known after apply" in the plan). See:

- https://log.martinatkins.me/2021/06/14/terraform-plan-unknown-values/

- https://github.com/hashicorp/terraform/issues/30937 (blocker of https://github.com/hashicorp/terraform-provider-kubernetes/issues/1775)

- https://github.com/opentofu/opentofu/pull/3539

The design goal for unknown values is that Terraform should always be able to produce some sort of plan, even if parts of it are not yet known, and then it's up to the user to review the plan and decide either to accept the risk that the unknown values might not be what's expected, or to apply changes from a smaller part of the configuration (e.g. using -target) in order to learn more final values and thus produce a plan with fewer unknowns.

apply

- Doc: https://developer.hashicorp.com/terraform/cli/commands/apply

- https://developer.hashicorp.com/terraform/cli/run#applying

- https://developer.hashicorp.com/terraform/tutorials/cli/apply

It actually executes a plan on real world infrastructure, to bring it to the desired state.

We can pass it a plan file from terraform plan -out=tfplan with terraform apply tfplan. It won't ask for approval then, since it assumes you've already reviewed the plan. If we don't pass a plan, it does a new plan before executing it, which guarantees that the plan is done with the infrastructure we have right now. In this case it asks for interactive approval, unless we use the option -auto-approve.

Some resources behave in unexpected ways. For example, a change of a network configuration on an API Gateway can make it offline for some time (eg 15 minutes).

destroy

- Doc: https://developer.hashicorp.com/terraform/cli/commands/destroy

- https://developer.hashicorp.com/terraform/cli/run#destroying

Destroys all the resources. You seldom do this in production, but frequently in a dev environment.

Be very careful when running terraform destroy -auto-approve. Make sure you are in the right directory! And only do it while developing.

It's an alias for terraform apply -destroy. You can also run terraform plan -destroy to show the proposed destroy changes without executing them, and then pass the destroy plan to apply.

See the Destroy planning mode.

Run destroy frequently while developing.

While developing some new infrastructure, instead of -or in addition to- doing incremental updates (eg create a VPC → apply → add subnets → apply → add security groups → apply...), it's a good practice to always do a destroy and then an apply after each update, re-creating the whole infrastructure from scratch.

This way we avoid cyclic dependencies, and we make sure that we can create the environment from scratch at any time.

Destroy can partially fail, that is, some resources may fail to be deleted, but the rest will. For example, if you have an S3 bucket that is not empty, it will fail to delete it, but the other resources in the plan will be gone.

fmt

- https://developer.hashicorp.com/terraform/cli/commands/fmt

- https://developer.hashicorp.com/terraform/language/style#code-formatting

terraform fmt

terraform fmt -check

validate

https://developer.hashicorp.com/terraform/cli/commands/validate

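Checks whether the configuration is syntactically valid and internally consistent (eg references to undeclared variables or unsupported arguments), without accessing remote state or cloud provider APIs.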

Requires a successful run of terraform init (i.e. local installation of all providers and modules) to function.

Gotcha: sometimes a single syntax error can generate multiple errors.

graph

https://developer.hashicorp.com/terraform/cli/commands/graph

Produces the Directed Acyclic Graph of resources, to see the objects dependencies.

If we run terraform graph it outputs the dependency graph as text, in the DOT language. Use https://dreampuf.github.io/GraphvizOnline to visualize the graph.

To create the graph image locally, install Graphviz (use Homebrew) and run:

terraform graph -type=plan | dot -Tpng > graph.png

We can pass a plan file:

terraform graph -plan=tfplan | dot -Tpng > graph.png

output

https://developer.hashicorp.com/terraform/cli/commands/output

terraform output -raw ec2_public_ip

If the output is a command, eg:

output "ssh_connect" {

value = "ssh -i ec2_rsa ec2-user@${aws_instance.ec2.public_ip}"

}

We can execute it with backticks:

`terraform output -raw ssh_connect`

HCL

https://github.com/hashicorp/hcl

Files

Usually you have 3 files:

main.tf

variables.tf

outputs.tf

Blocks

https://developer.hashicorp.com/terraform/language/syntax/configuration

Everything is a block. Nothing can exist outside of a block.

A block has a type or keyword (terraform, resource, variable...) and optionally some labels, followed by curly braces, which delimit the body. Inside the curly braces (the body) we have arguments and nested blocks. (The HCL spec uses 'attribute' instead of 'argument' source.) Each argument has a name or key and a value. The value comes from an expression: a string literal, a boolean, a number, a variable, a function in HCL, a reference to an attribute of a resource or a data source, or a combination of them. See documentation

block_type "block_label_1" "block_label_2" {

# Body

# Argument

argument_name = "argument_value_from_expression"

nested_block {

}

}

Most blocks can appear multiple times (eg terraform, resource, variable, module...), but some can't, which should be detailed in the documentation.

Argument names, block type names, labels etc. are identifiers. An identifier can contain letters, digits, _ and -. However, the first character must not be a digit. source

Primitive types (scalars)

https://developer.hashicorp.com/terraform/language/expressions/type-constraints#primitive-types

- string

  - Can only use double quotes (not single)

- bool

  - true or false

- number

  - Can be an int or a float, there's only a single type for both

- null

  - No value assigned

Terraform can coerce (cast) values of one type to another type automatically if a type is expected but you pass a different one. Sometimes it works and sometimes it doesn't. See https://developer.hashicorp.com/terraform/language/expressions/type-constraints#conversion-of-primitive-types
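A minimal sketch of automatic conversion between primitive types. The variable and local names (instance_count, enable_logs, count_label) are hypothetical and only illustrate the rule.

```hcl
# Hypothetical variables, used only to illustrate type conversion.
variable "instance_count" {
  type    = number
  default = "3" # The string "3" is converted to the number 3.
}

variable "enable_logs" {
  type    = bool
  default = "true" # The string "true" is converted to the bool true.
}

locals {
  # A number is converted to a string where a string is expected,
  # for example inside a template interpolation.
  count_label = "count-${var.instance_count}"
}

# A conversion that cannot succeed is an error: a default of "three"
# for instance_count would be rejected, because the string does not
# contain a valid number.
```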

All types: https://developer.hashicorp.com/terraform/language/expressions/types

Blocks

Top-level block types available in Terraform.

terraform

https://developer.hashicorp.com/terraform/language/terraform

Configures Terraform, the backend etc.

terraform {

required_version = "~> 1.9.2"

required_providers {

aws = {

source = "hashicorp/aws"

version = ">= 5.0"

}

}

}

Using variables in the terraform block

https://nulldog.com/terraform-s3-backend-variables-a-complete-guide

You can't reference any input variable inside the terraform block (source):

You can only use constant values in the terraform block. Arguments in the terraform block cannot refer to named objects, such as resources and input variables. Additionally, you cannot use built-in Terraform language functions in the block.

So this is not allowed:

terraform {

backend "s3" {

bucket = var.state_bucket # Not allowed

}

}

However, you can have multiple terraform blocks, which helps overcome this limitation: you can create a file on the fly (using a HereDoc, for example) that contains an extra terraform block in which you set the values.

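Environment variables with the TF_VAR_ prefix do not lift this restriction in Terraform: they set var.state_bucket, but the backend block still can't reference it. OpenTofu 1.8 and later do allow variables in the backend block (early evaluation), so there this works:

export TF_VAR_state_bucket=mybucket

tofu init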

You can also use Partial configuration:

terraform {

backend "s3" {

bucket = ""

key = ""

region = ""

profile = ""

}

}

With partial configuration you have 3 options to set the values.

- Use a configuration file (same format as terraform.tfvars):

terraform init -backend-config="./state.config"

bucket = "your-bucket"

key = "your-state.tfstate"

region = "eu-central-1"

profile = "Your_Profile"

- Command-line key/value pairs:

terraform init \

-backend-config="bucket=your-bucket" \

-backend-config="key=path/to/your/state.tfstate" \

-backend-config="region=eu-central-1" \

-backend-config="profile=Your_Profile"

- Interactively

Terraform will prompt you to enter the values.

provider

https://developer.hashicorp.com/terraform/language/providers

Providers are what we use to interact with cloud vendors. A provider supplies the implementations of resources and data sources. Without providers, Terraform can't manage any kind of infrastructure.

See Providers below.

The provider block is optional and allows us to specify additional configuration.

terraform {

required_providers {

# This name (aws) can be anything, but by convention it matches the last part of the source.

# It is the name used in the provider block below.

aws = {

source = "hashicorp/aws"

version = ">= 5.0"

}

}

}

provider "aws" {

region = "us-east-1"

}

We can have multiple instances of the provider block. For example, if we want to deploy resources in two different AWS regions we need two instances of the provider - see the resource provider meta-argument.

resource

https://developer.hashicorp.com/terraform/language/resources

What we create and manage. The heart of Terraform and the reason why it exists. Supports CRUD operations.

The first label is the resource type, and the second the resource name.

resource "type" "name" {

}

The resource name is used to identify multiple resources of the same type.

resource "aws_vpc" "web_vpc" {

cidr_block = "10.0.0.0/16"

}

The identifier of the resource is type.name, eg aws_vpc.web_vpc. This is how you reference this resource in the state. You can't have 2 resources with the same identifier (ie same type and name):

resource "aws_vpc" "web_vpc" {

}

# Not allowed

resource "aws_vpc" "web_vpc" {

}

The resource type (aws_vpc or aws_instance) always starts with the name of the provider and an underscore (aws_).

By default, Terraform interprets the initial word in the resource type name (separated by underscores) as the local name of a provider, and uses that provider's default configuration. source

By convention, resource type names start with their provider's preferred local name. source

Resource names are scoped to the module where they are defined, and not visible outside of it. To expose data from a module, use output blocks.

data source

https://developer.hashicorp.com/terraform/language/data-sources

Something that exists outside of our Terraform code that we want to get properties from, to pass them to our resources. A way to query the cloud provider's APIs for data.

A data source is read only, whereas resources support CRUD operations.
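A short sketch of a data source feeding a resource. The AMI name pattern and the resource names (al2023, web) are assumptions for illustration; check the values that apply to your account and region.

```hcl
# Look up the most recent AMI owned by Amazon that matches a name pattern.
# The pattern below is an assumed example, not a verified value.
data "aws_ami" "al2023" {
  most_recent = true
  owners      = ["amazon"]

  filter {
    name   = "name"
    values = ["al2023-ami-*-x86_64"]
  }
}

# A data source is referenced as data.<type>.<name>.<attribute>.
resource "aws_instance" "web" {
  ami           = data.aws_ami.al2023.id
  instance_type = "t3.micro"
}
```

Terraform reads the data source during plan or apply and never creates, updates or deletes the object it describes.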

variable

https://developer.hashicorp.com/terraform/language/values/variables

An input or parameter. Variables make the code more reusable by avoiding hardcoded values.

Variables are also available in other tools that use HCL (unlike resource for example, which is Terraform specific).

variable "aws_region" {

description = "AWS region"

type = string

default = "us-east-1"

}

The identifier is var.name, eg var.aws_region. This is how you reference it.

If you don't specify a type, its type will be any. It's important to always specify the type to avoid errors.

Don't set a default value for sensitive values or things that need to be unique globally (like an S3 bucket).

To set a variable, you have these options (later ones take precedence):

- If we don't pass any value, it uses the default value (if available).

- Use an environment variable named TF_VAR_ + the variable name, eg TF_VAR_aws_region.

  - In Unix use export TF_VAR_aws_region=us-east-1.

- Use a terraform.tfvars, terraform.tfvars.json, *.auto.tfvars or *.auto.tfvars.json file.

  - Files are processed in this order; the *.auto.tfvars and *.auto.tfvars.json files are processed in lexical order of their filenames. Multiple files can define the same variable, and the last one processed takes precedence.

  - To use any other file name, use the -var-file CLI option, eg: terraform plan -var-file="prod.tfvars".

  - Do not store sensitive values in tfvars files that are checked into version control.

- Use the CLI options -var 'aws_region=us-east-1' or -var-file="prod.tfvars" (see docs).

- If no value was given with the previous options, you'll be prompted to supply the value interactively in the CLI.

  - To prevent the interactive prompt from waiting for input in CI/CD pipelines, do export TF_INPUT=0. Otherwise, it will wait indefinitely until the pipeline times out! With TF_INPUT=0, Terraform throws an error if a variable is missing.

If we run terraform plan and we save the plan into a file, all the variables will be saved in the file and will be used when doing apply. But if we don't save the plan into a file, when we run apply, since it runs plan again, we'll need to supply the variables again somehow.

sensitive

To mask or avoid printing a sensitive value:

variable "db_password" {

description = "DB Password"

type = string

sensitive = true

}

nullable

It defaults to true, so null is accepted as a value.

variable "db_password" {

description = "DB Password"

type = string

nullable = false

}

In a module, if nullable is true (the default), passing null overrides the default value, so the variable is null inside the module. But when it is false, Terraform uses the default value when a module input argument is null.
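A sketch of the module behavior described above, assuming a hypothetical local module at ./modules/db with a port variable.

```hcl
# modules/db/variables.tf (hypothetical module)
variable "port" {
  type     = number
  default  = 5432
  nullable = false
}

# Root module. Because nullable = false, passing null makes Terraform
# fall back to the default, so var.port is 5432 inside the module.
# With nullable = true (the default), var.port would be null instead.
module "db" {
  source = "./modules/db"
  port   = null
}
```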

validation

https://developer.hashicorp.com/terraform/language/expressions/custom-conditions

variable "min_size" {

type = number

validation {

condition = var.min_size >= 0

error_message = "The min_size must be greater than or equal to zero."

}

}

See Variable validation examples below.

output

https://developer.hashicorp.com/terraform/language/values/outputs

The opposite of an input variable. It returns data (the value) when we run apply or output.

output "instance_ip_addr" {

value = aws_instance.server.private_ip

}

sensitive

We can set the argument sensitive to true to avoid displaying it. In this case, we can use terraform output my_var to display it.

Important: the value is still stored in the state file, see Manage sensitive data in your configuration:

Terraform stores values with the sensitive argument in both state and plan files, and anyone who can access those files can access your sensitive values. Additionally, if you use the terraform output CLI command with the -json or -raw flags, Terraform displays sensitive variables and outputs in plain text.

If the output references a sensitive input variable or resource, you need to add sensitive = true to indicate that you are intentionally outputting a secret, or use the nonsensitive function (see example), otherwise you get this error:

│ Error: Output refers to sensitive values

│

│ on main.tf line 23:

│ 23: output "password" {

│

│ To reduce the risk of accidentally exporting sensitive data that was intended to be only internal, Terraform requires that any root module output containing sensitive data be explicitly

│ marked as sensitive, to confirm your intent.

│

│ If you do intend to export this data, annotate the output value as sensitive by adding the following argument:

│ sensitive = true
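The two ways of resolving this error can be sketched as follows. The output name password_length is hypothetical; nonsensitive should only be applied to values that are actually safe to display.

```hcl
variable "db_password" {
  type      = string
  sensitive = true
}

# Option 1: mark the output as sensitive to confirm the intent.
# The value is redacted in the plan and apply output.
output "password" {
  value     = var.db_password
  sensitive = true
}

# Option 2: remove the sensitive marking with nonsensitive().
# Here only a derived value (the length) is exposed, not the secret.
output "password_length" {
  value = nonsensitive(length(var.db_password))
}
```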

ephemeral

locals

https://developer.hashicorp.com/terraform/language/block/locals

https://developer.hashicorp.com/terraform/language/values/locals

Since all code in HCL must be inside a block, we use the locals block to define values, manipulate data etc. To avoid repetition, they allow reusing an expression within a module.
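A minimal sketch of locals used to avoid repetition. The variable names (project, environment) and the bucket resource are illustrative assumptions.

```hcl
variable "project" {
  type = string
}

variable "environment" {
  type = string
}

locals {
  # Computed once, reused in several places as local.<name>.
  name_prefix = "${var.project}-${var.environment}"

  common_tags = {
    Project     = var.project
    Environment = var.environment
  }
}

resource "aws_s3_bucket" "logs" {
  bucket = "${local.name_prefix}-logs"
  tags   = local.common_tags
}
```

Note that the block is declared as locals (plural) but each value is referenced as local.name (singular).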

module

https://developer.hashicorp.com/terraform/language/block/module

https://developer.hashicorp.com/terraform/language/modules

Other code written in HCL that's reusable and we call from our code. A library.
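A sketch of calling a module, assuming a hypothetical local module at ./modules/network that declares a cidr_block input variable and a vpc_id output.

```hcl
# Call the module. Arguments other than source and the meta-arguments
# are the module's input variables.
module "network" {
  source = "./modules/network" # hypothetical local path

  cidr_block = "10.0.0.0/16"
}

# Module outputs are referenced as module.<name>.<output>.
# This assumes the module declares: output "vpc_id" { ... }
output "vpc_id" {
  value = module.network.vpc_id
}
```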

ephemeral resources

Ephemeral resources were introduced in Terraform 1.10, and write-only arguments in Terraform 1.11.

https://developer.hashicorp.com/terraform/language/block/ephemeral

https://developer.hashicorp.com/terraform/language/manage-sensitive-data#use-the-ephemeral-block

https://developer.hashicorp.com/terraform/language/manage-sensitive-data/ephemeral

https://developer.hashicorp.com/terraform/language/manage-sensitive-data/write-only

Example: https://github.com/hashicorp-education/learn-terraform-rds-upgrade/blob/main/main.tf - Tutorial https://developer.hashicorp.com/terraform/tutorials/aws/rds-upgrade - Uses Systems Manager Parameter Store (SSM)

Ephemeral values are available at the run time of an operation, but Terraform omits them from state and plan files. Because Terraform does not store ephemeral values, you must capture any generated values you want to preserve in another resource or output in your configuration.

You can only reference an ephemeral resource in other ephemeral contexts, such as a write-only argument in a managed resource or provider blocks (see this and this). The provider uses the write-only argument value to configure the resource, then Terraform discards the value without storing it. Note that write-only arguments accept both ephemeral and non-ephemeral values.

Terraform does not store write-only arguments in state files, so Terraform has no way of knowing if a write-only argument value has changed. However, providers typically include version arguments (password_wo_version) alongside write-only arguments (password_wo). Terraform stores version arguments in state, and can track if a version argument changes and trigger an update of a write-only argument.

Example from https://developer.hashicorp.com/terraform/language/manage-sensitive-data/write-only#set-and-store-an-ephemeral-password-in-aws-secrets-manager and https://developer.hashicorp.com/terraform/language/manage-sensitive-data/ephemeral#write-only-arguments:

# Used to create the password without storing it in state, passing the value to

# the write-only argument secret_string_wo of the aws_secretsmanager_secret_version

# resource only during the apply phase.

ephemeral "random_password" "db_password" {

length = 16

override_special = "!#$%&*()-_=+[]{}<>:?"

}

resource "aws_secretsmanager_secret" "db_password" {

name = "db_password"

}

resource "aws_secretsmanager_secret_version" "db_password" {

secret_id = aws_secretsmanager_secret.db_password.id

secret_string_wo = ephemeral.random_password.db_password.result

# Terraform stores the secret_string_wo_version argument value in state and can

# track if it changes. Increment this value when an update to the password is required.

secret_string_wo_version = 1

}

# Used to retrieve the password from AWS Secrets Manager and pass it to the password_wo

# argument of the aws_db_instance.

ephemeral "aws_secretsmanager_secret_version" "db_password" {

secret_id = aws_secretsmanager_secret_version.db_password.secret_id

}

resource "aws_db_instance" "example" {

password_wo = ephemeral.aws_secretsmanager_secret_version.db_password.secret_string

# Terraform stores the password_wo_version argument value in state and can track if it changes.

# Increment the password_wo_version value when an update to password_wo is required.

password_wo_version = aws_secretsmanager_secret_version.db_password.secret_string_wo_version

}

Instead of doing all this, consider using manage_master_user_password to enable managing the master password with Secrets Manager. See Password management with Amazon RDS and AWS Secrets Manager.

If you get this error when running terraform apply:

│ Error: reading AWS Secrets Manager Secret Versions Data Source (<null>): couldn't find resource

│

│ with module.rds.ephemeral.aws_secretsmanager_secret_version.db_password,

│ on ../../modules/rds/password.tf line 27, in ephemeral "aws_secretsmanager_secret_version" "db_password":

│ 27: ephemeral "aws_secretsmanager_secret_version" "db_password" {

│

│ couldn't find resource

The issue is that we're trying to read the secret from AWS Secrets Manager (with ephemeral "aws_secretsmanager_secret_version") immediately after creating it.

The solution is to delete the ephemeral "aws_secretsmanager_secret_version" resource and use the ephemeral "random_password" result directly instead of reading it back from Secrets Manager:

# Remove this:

ephemeral "aws_secretsmanager_secret_version" "db_password" {

secret_id = aws_secretsmanager_secret_version.db_password.secret_id

}

resource "aws_db_instance" "example" {

# Change this:

password_wo = ephemeral.aws_secretsmanager_secret_version.db_password.secret_string

# To this:

password_wo = ephemeral.random_password.db_password.result

}

If you reference an ephemeral resource in a locals block, that value is also not stored in state or plan files, because the local value becomes ephemeral too. Example from https://developer.hashicorp.com/terraform/language/block/ephemeral#fundamental-ephemeral-resource:

ephemeral "aws_secretsmanager_secret_version" "db_master" {

secret_id = aws_secretsmanager_secret_version.db_password.secret_id

}

locals {

credentials = jsondecode(ephemeral.aws_secretsmanager_secret_version.db_master.secret_string)

}

provider "postgresql" {

username = local.credentials["username"]

password = local.credentials["password"]

}

Each provider defines the available ephemeral resource blocks and write-only arguments:

- Random provider

- AWS provider

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/ephemeral-resources/ecr_authorization_token

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/ephemeral-resources/kms_secrets

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/ephemeral-resources/lambda_invocation

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/ephemeral-resources/ssm_parameter

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/ephemeral-resources/secretsmanager_random_password

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/ephemeral-resources/secretsmanager_secret_version

list resources

New in Terraform 1.14 (November 2025): https://github.com/hashicorp/terraform/releases/tag/v1.14.0

https://developer.hashicorp.com/terraform/plugin/framework/list-resources

https://developer.hashicorp.com/terraform/language/block/tfquery/list

List resources let you search for existing infrastructure of a given type, like VPCs, subnets and EC2 instances. You can only add list blocks to configuration files with the .tfquery.hcl extension. Searching is invoked via the terraform query command.

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/list-resources/cloudwatch_log_group

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/list-resources/instance

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/list-resources/subnet

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/list-resources/vpc

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/list-resources/iam_policy

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/list-resources/iam_role

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/list-resources/iam_role_policy_attachment

action

New in Terraform 1.14 (November 2025): https://github.com/hashicorp/terraform/releases/tag/v1.14.0

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/actions/cloudfront_create_invalidation

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/actions/dynamodb_create_backup

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/actions/ec2_stop_instance

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/actions/events_put_events (EventBridge)

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/actions/lambda_invoke

- https://registry.terraform.io/providers/hashicorp/aws/latest/docs/actions/sns_publish

Meta-arguments

Arguments that are built into the language, as opposed to arguments defined by the providers. They are available to every resource, data source and module - nothing else.

resource provider

- https://developer.hashicorp.com/terraform/language/meta-arguments/resource-provider

- https://developer.hashicorp.com/terraform/language/meta-arguments/module-providers

Allows us to distinguish multiple instances of a provider.

For example, to deploy to multiple AWS regions we need multiple provider "aws" instances:

# The default. Will be used when a resource doesn't specify a 'provider'

provider "aws" {

region = "us-east-1"

}

provider "aws" {

region = "us-west-1"

alias = "california"

}

resource "aws_vpc" "myvpc" {

provider = aws.california

}

# On a module we use a map

module "example" {

providers = {

aws = aws.california

}

}

We can also use it when doing VPC Peering or Transit Gateway and the VPCs are in different accounts, and we need to get information from multiple AWS accounts. You should rarely use multiple accounts though; try to use a single account.

Important:

- Do not specify an alias for the first instance of the provider. Only do it for the second or third. This way we avoid adding provider in many places.

- The default instance should be the one that is used the most. The instance with an alias should be used for a few resources only.

lifecycle

https://developer.hashicorp.com/terraform/language/meta-arguments/lifecycle

It's a nested block.

For example, when we want to change the configuration of an EC2 virtual machine (eg change the AMI), by default Terraform is going to first kill the existing VM, and then create a new one, resulting in some downtime. We can avoid this by using create_before_destroy, which tells Terraform to create a new VM before we kill the existing one.
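A minimal sketch of create_before_destroy. The ami_id variable and the resource name are hypothetical.

```hcl
variable "ami_id" {
  type = string
}

resource "aws_instance" "web" {
  ami           = var.ami_id
  instance_type = "t3.micro"

  # When a change forces replacement (eg a new AMI), create the new
  # instance first and destroy the old one afterwards.
  lifecycle {
    create_before_destroy = true
  }
}
```

This only works when the old and the new object can exist at the same time, so resources with unique names or other uniqueness constraints may need extra care.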

depends_on

https://developer.hashicorp.com/terraform/language/meta-arguments/depends_on

Should be used rarely. Ask yourself if you really need it.
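
depends_on is only needed for hidden dependencies, the ones Terraform cannot infer from references between resources. The following sketch loosely follows the pattern used in the Terraform documentation: software on the instance needs the IAM policy at boot, but the instance never references the policy. All names, the bucket ARN and the ami_id variable are hypothetical:

```hcl
variable "ami_id" {
  type        = string
  description = "AMI to launch (hypothetical input for this sketch)"
}

resource "aws_iam_role" "app" {
  name = "app-role"

  assume_role_policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect    = "Allow"
      Action    = "sts:AssumeRole"
      Principal = { Service = "ec2.amazonaws.com" }
    }]
  })
}

resource "aws_iam_role_policy" "app" {
  name = "app-s3-read"
  role = aws_iam_role.app.name

  policy = jsonencode({
    Version = "2012-10-17"
    Statement = [{
      Effect   = "Allow"
      Action   = "s3:GetObject"
      Resource = "arn:aws:s3:::example-bucket/*"
    }]
  })
}

resource "aws_iam_instance_profile" "app" {
  name = "app-profile"
  role = aws_iam_role.app.name
}

resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = "t3.micro"

  # Implicit dependency: Terraform infers it from this reference
  iam_instance_profile = aws_iam_instance_profile.app.name

  # Hidden dependency: nothing above references the policy,
  # so we have to declare it explicitly
  depends_on = [aws_iam_role_policy.app]
}
```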

count

https://developer.hashicorp.com/terraform/language/meta-arguments/count

How many instances of the resource or module to create.

All instances should be almost identical, otherwise it is safer to use for_each. See When to Use for_each Instead of count.

A resource can only use count or for_each, but not both.
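
A small sketch of count, assuming three near-identical workers. The resource name and the ami_id variable are made up for illustration:

```hcl
variable "ami_id" {
  type        = string
  description = "AMI to launch (hypothetical input for this sketch)"
}

resource "aws_instance" "worker" {
  count = 3 # creates aws_instance.worker[0], [1] and [2]

  ami           = var.ami_id
  instance_type = "t3.micro"

  tags = {
    # count.index is the zero-based position of each instance
    Name = "worker-${count.index}"
  }
}

output "worker_ids" {
  # The splat expression collects the id of every instance into a list
  value = aws_instance.worker[*].id
}
```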

for_each

https://developer.hashicorp.com/terraform/language/meta-arguments/for_each

Similar to count, it is also used to create multiple instances of a resource or module, but it lets us set different properties on each one in a safer way.
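
A sketch of for_each over a map, where each instance gets its own instance type. The map contents, the resource name and the ami_id variable are assumptions for illustration:

```hcl
variable "ami_id" {
  type        = string
  description = "AMI to launch (hypothetical input for this sketch)"
}

locals {
  # Key = logical name of the instance, value = its instance type
  instances = {
    web = "t3.micro"
    api = "t3.small"
  }
}

resource "aws_instance" "app" {
  for_each = local.instances

  ami           = var.ami_id
  instance_type = each.value

  tags = {
    Name = each.key
  }
}

output "instance_ids" {
  # Map of logical name => instance id
  value = { for name, instance in aws_instance.app : name => instance.id }
}
```

The instances are addressed by key (aws_instance.app["web"]), so removing one key from the map only affects that instance. With count, removing an element from the middle of a list shifts the indexes of the ones after it.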

State

- https://developer.hashicorp.com/terraform/language/state

- https://developer.hashicorp.com/terraform/cli/state

- https://developer.hashicorp.com/terraform/cli/commands/state

https://www.gruntwork.io/blog/how-to-manage-terraform-state

Primarily, the state binds remote objects with resources declared in our configuration files.

State is stored in a JSON file:

{

"version": 4, // Version of the Terraform state schema

"terraform_version": "1.9.5", // Version of Terraform that last modified the state

"serial": 79, // Version of this state file. Incremented every time we update the state

"lineage": "8e16b7bb-3593-c363-6bf9-bde5a11ad86a",

"outputs": {

"bucket_name": {

"value": "session2-bucket-xu0th",

"type": "string"

}

},

"resources": [],

"check_results": null

}

It contains the Directed Acyclic Graph of resources; see the "dependencies" array that each resource instance records in the state.

State contains sensitive values like passwords and private keys, so it needs to be stored securely. See Sensitive Data in State. This is one reason why we don't commit the state in our Git repositories.

Another reason not to commit the state into version control is that we usually want to deploy multiple instances of our infrastructure (dev, test, staging, prod). Each environment requires its own state file, which can live outside of our repo, decoupled from our code.

Why do we need a state file

Why do we need a state file (terraform.tfstate) if we already have the infrastructure in the cloud and we can query it? For various reasons:

- Terraform doesn't manage all the resources in a cloud (AWS, Azure...) account, but only a subset of them. You can have many more resources, which may also be managed by other Terraform projects, or created manually or using the API. The state file records which resources are managed by a given Terraform project.

- The state file maps the resources in your configuration to the resources in the cloud, for example, by recording their IDs.

- State allows Terraform to detect drift (changes made manually, directly on the cloud resources). Drift cannot be detected by comparing only the configuration files with the cloud resources, because there's no way to tell whether a difference is a change you want to make or drift; you need a three-way comparison between configuration, state and real infrastructure.

- By having the information of the resources cached locally, Terraform doesn't need to query the cloud provider every time it needs that information, which would be time consuming and would probably be rate-limited due to making hundreds of API calls.

- Not all resource information is defined in your Terraform code. Some information, for example the IDs of EC2 instances, is generated by the cloud providers after creating the resources, so you need to record it outside of the configuration. In addition, it's not always possible to recover all the information using the cloud APIs after creating a resource, so you need to record it at creation time.

- State allows you to import existing resources from the cloud.

- State records dependencies between objects. This is necessary, for example, when deleting resources: the configuration no longer contains those resources, but Terraform needs to know which resources to delete, and in which order.

See more reasons at:

Bootstrapping Microservices section 7.8.7, page 217.

Purpose of Terraform State - https://developer.hashicorp.com/terraform/language/state/purpose

Why a state file is required? - https://stackoverflow.com/questions/74420611/terraform-why-a-state-file-is-required

Why is it required to persist terraform state file remotely? - https://stackoverflow.com/questions/54855030/why-is-it-required-to-persist-terraform-state-file-remotely

If you lose the state you will end up with orphaned resources that are not being managed by Terraform.

State backend (storage)

https://developer.hashicorp.com/terraform/language/settings/backends/configuration

By default the state file terraform.tfstate is stored locally using the local backend. This is OK for development, but not for anything serious since:

- It is not backed up, so we can lose it. This is really bad since our state is crucial to use Terraform.

- It is not accessible to others, so we can't collaborate with other people.

It's better to store the state remotely with a state backend like S3 or HCP Terraform.

Usually you need to install a provider to talk to a cloud provider like AWS or Azure using their APIs. However, Terraform knows how to use (for example) S3 or Google Cloud Storage APIs for the purpose of storing state, without installing any provider. Note that we can deploy resources to Azure (using the Azure provider) and use S3 for the state backend (without a provider) at the same time.
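
A minimal sketch of an S3 backend configuration. The bucket name and key are placeholders, and the bucket is assumed to exist already (the backend does not create it). The use_lockfile setting is an assumption that only holds on Terraform 1.10 or later:

```hcl
terraform {
  backend "s3" {
    bucket  = "my-terraform-states" # hypothetical bucket, must already exist
    key     = "networking/dev/terraform.tfstate"
    region  = "us-east-1"
    encrypt = true # encrypt the state object at rest

    # Assumption: Terraform 1.10 or later, where the S3 backend can lock
    # the state with a lock file in the bucket
    use_lockfile = true
  }
}
```

Backend blocks cannot reference input variables or locals, which is why the values are literals here.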

Migrate backend state

https://developer.hashicorp.com/terraform/tutorials/cloud/migrate-remote-s3-backend-hcp-terraform

If we change the backend (eg from local to S3) we need to run terraform init. Terraform will ask us to move any existing local state to the new backend, and we need to answer 'yes':

$ terraform init

Initializing the backend...

Do you want to copy existing state to the new backend?

Pre-existing state was found while migrating the previous "local" backend to the

newly configured "s3" backend. No existing state was found in the newly

configured "s3" backend. Do you want to copy this state to the new "s3"

backend? Enter "yes" to copy and "no" to start with an empty state.

Enter a value: yes

Successfully configured the backend "s3"! Terraform will automatically

use this backend unless the backend configuration changes.

This creates a new state file object in the S3 bucket.

Often backends don't allow you to migrate state straight from another backend (eg S3 → GCS), so you need to migrate the existing remote state to local first, and then to the new backend (S3 → local → GCS).

To move a remote state back to local use terraform init -migrate-state, which reconfigures the backend and attempts to migrate any existing state, prompting for confirmation (answer 'yes'). This creates a local terraform.tfstate file. There's also the -force-copy option that suppresses these prompts and answers "yes" to the migration questions.

$ terraform init -migrate-state

Terraform has detected you're unconfiguring your previously set "s3" backend.

Do you want to copy existing state to the new backend?

Pre-existing state was found while migrating the previous "s3" backend to the

newly configured "local" backend. No existing state was found in the newly

configured "local" backend. Do you want to copy this state to the new "local"

backend? Enter "yes" to copy and "no" to start with an empty state.

Enter a value: yes

Successfully unset the backend "s3". Terraform will now operate locally.

State locking

https://developer.hashicorp.com/terraform/language/state/locking

To prevent concurrent updates to the state, Terraform supports locking. While the state is locked, any other operation that could write to it fails instead of running. When the state is stored locally, Terraform creates a lock file .terraform.tfstate.lock.info so that two processes don't update the state concurrently, although the chances of this happening are very low. Remote backends really need to support locking, otherwise the state file can be corrupted or have conflicts due to race conditions. Not all remote backends support locking. HCP Terraform is a good choice.

Modify state

You should never manually edit the state file. Instead, use the state command subcommands:

- terraform state -help: list the state subcommands.

- terraform state list: list all the resources.

- terraform state show <resource_id>: show attributes of a resource.

- terraform state mv <old_id> <new_id>: to change the resource identifier.

  - When we change a resource name, Terraform deletes the existing resource and creates a new one. To avoid this, we can use mv to tell Terraform that it is the same resource.

  - Required for resources that have a configuration or data that needs to be preserved.

  - Alternatively, we can use the moved block to do this too.

- terraform state rm <resource_id>: removes a resource from the state only; it doesn't delete the actual resource in the cloud.

  - Used when we extract a part of our Terraform code to a different Git repository. Or when we want to deploy a new instance of a resource, but at the same time keep the old one to investigate (forensics) or check something on it.

  - Alternatively, we can use the removed block to do this too.
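
The moved and removed blocks mentioned in the list can be sketched like this. The resource addresses and the bucket name are hypothetical, and the removed block assumes Terraform 1.7 or later:

```hcl
# Declarative counterpart of:
#   terraform state mv aws_s3_bucket.old_name aws_s3_bucket.new_name
moved {
  from = aws_s3_bucket.old_name
  to   = aws_s3_bucket.new_name
}

resource "aws_s3_bucket" "new_name" {
  bucket = "my-example-bucket"
}

# Declarative counterpart of:
#   terraform state rm aws_instance.legacy
# The resource block for aws_instance.legacy must be deleted from the code.
removed {
  from = aws_instance.legacy

  lifecycle {
    # Forget the resource, but leave the real instance running in the cloud
    destroy = false
  }
}
```

Unlike the CLI commands, these blocks live in the code, so the change is reviewed and applied through the normal plan and apply cycle.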

Use these commands to address some kinds of state drift. For example, if a security vulnerability was fixed outside of Terraform, they help us adjust the state so that Terraform doesn't try to undo the changes.

When we run terraform state list, if a resource address has square brackets (eg module.bucket.aws_s3_bucket.this[0]), it means that the module is using count internally and may create multiple instances (including zero) of the resource, so an index is required to specify which one it is:

resource "aws_s3_bucket" "this" {

count = local.create_bucket ? 1 : 0

}

Import resources into state

We can use terraform import to grab resources that have been deployed and add them into the state. The resources can be things that already existed before we started using Terraform, for example.

Some resources can't be imported, which should be detailed in the provider documentation for that resource.

Resources need to be imported one at a time, but we can create a script to import many.

The command doesn't write any HCL, so we are responsible for updating the code.

terraform import workflow:

- We have some resources that already exist in the infrastructure, but not in our state.

- We run terraform import to gather the resources, and commit them into the state.

  - The command depends on the resource and the provider. For example, to import an EC2 instance we use terraform import aws_instance.web i-12345678 according to the docs. And to import a VPC we do terraform import aws_vpc.web_vpc vpc-0eaee8c907067a57be.

  - The target address must already be declared in the configuration, so we first add a resource block for it (it can be almost empty at this point).

  - Once the resources are imported, the state is aware of them, but we still don't have the complete code for them.

- We write the code that matches the state.

  - Most likely this code is not perfect, except for very simple resources, so we'll need to adjust it.

- Run terraform plan and if there are changes, update the code iteratively until terraform plan gives no changes.

  - Most likely, the first time we run plan it will say that we have some delta/gap, ie some changes to apply to the infrastructure. But we don't want to apply these changes. Instead, we need to revise the code we've written to incorporate the gap, and run plan again. We do this iteratively until there are no changes.

- Finally, when terraform plan doesn't list any change, run terraform apply.

  - Even though there are no changes, this updates the state file (eg it increments the "serial" number).

Because executions of the import command are not recorded in version control, newer Terraform versions (1.5 and later) offer an import block, which we can write and commit to version control. Terraform can also generate the HCL code for the imported resources with terraform plan -generate-config-out=<file>, although you'll need to edit the result because it can contain hardcoded values.
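
A sketch of an import block, reusing the EC2 address and ID from the workflow above. It assumes Terraform 1.5 or later, and generated.tf is an arbitrary file name:

```hcl
# Declarative counterpart of: terraform import aws_instance.web i-12345678
import {
  to = aws_instance.web
  id = "i-12345678"
}

# If aws_instance.web has no resource block yet, Terraform can draft one:
#   terraform plan -generate-config-out=generated.tf
# Review the generated file, then run terraform apply to perform the import.
```

Once the import has been applied, the import block can be deleted or kept as a record of where the resource came from.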

Replace a resource

- https://developer.hashicorp.com/terraform/tutorials/state/state-cli#replace-a-resource-with-cli

- https://developer.hashicorp.com/terraform/cli/commands/plan#replace-address

terraform plan -replace=random_string.s3_bucket_suffix

terraform apply -replace=random_string.s3_bucket_suffix

See example of replacing an EC2 instance to launch a new instance with tags here (terraform apply -replace aws_autoscaling_group.example): https://developer.hashicorp.com/terraform/tutorials/aws/aws-default-tags#propagate-default-tags-to-auto-scaling-group

Providers

https://developer.hashicorp.com/terraform/language/providers

A provider is a cloud platform (AWS, Azure, GCP...), a service (Cloudflare, Akamai, CockroachDB, GitLab, DataDog...) or a tool (Kubernetes, Helm...).

Without providers, Terraform can't manage any kind of infrastructure.

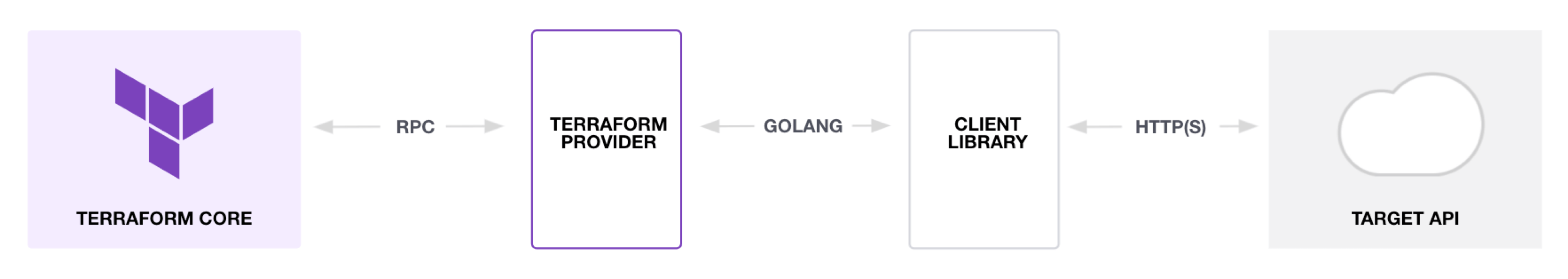

Providers are plugins of the Terraform core (the terraform binary). The core installs the provider binaries (with terraform init) and communicates with providers using RPC. In turn, providers talk to cloud services using their APIs.

Providers are written in Go using the Terraform Plugin Framework or the older Terraform Plugin SDKv2.

Providers define the resources and data sources available. Data sources are read only, whereas the resources support CRUD operations. When a provider implements a resource, it needs to implement a set of functions in Go (Create, Read, Update, Delete...) - see docs.

Each provider has a prefix used in its resources and data sources. For example, the AWS provider uses the prefix aws_ (aws_instance, aws_vpc), the Azure provider uses azurerm_ (azurerm_virtual_machine, azurerm_resource_group).

Usually providers are pulled from the Terraform Registry, where you have the documentation and versions available. There are other provider registries. In the Terraform Registry there are 4 provider tiers (source):

- Official: owned and maintained by HashiCorp. They use the hashicorp namespace.

- Partner: owned and maintained by third-party companies against their own APIs. They must participate in the HashiCorp Technology Partner Program.

- Community: published by individuals or organizations.

- Archived: deprecated or no longer maintained, but still available so that your code can still function.

Be careful with community providers. A provider can do anything with your authentication credentials! It can contain malicious code and send information (for example, about your infrastructure) to anyone.

We can use multiple providers together. This is an advantage of Terraform over other tools like CloudFormation, since we can define (for example) AWS resources using the hashicorp/aws provider and then deploy third party tools and software onto it using other providers from Red Hat or Palo Alto Networks. In addition, we can combine AWS services with other services running outside AWS like CloudFlare or Datadog in the same code.

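Declaring providers with required_providers is not strictly mandatory for providers in the hashicorp namespace, but it lets us set the source and use version constraints, and it is needed for providers from other namespaces. A provider version is optional; if omitted, terraform init selects the latest one available and records it in the dependency lock file.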

Modules

https://developer.hashicorp.com/terraform/language/modules

https://developer.hashicorp.com/terraform/language/block/module

Reusable configuration. Like a library or package in other languages. To create resources in a repeatable way.

A module is an opinionated collection of resources, which are tightly coupled and should be deployed together.

All Terraform code is a module. The main directory where you run plan and apply is the root module, which calls other modules, the child modules. A child module can also call its own nested child module.

We use variables to pass data into the module, and outputs to get data out from it.

We do not generally specify provider blocks within a module, we simply allow them to pass through from the root.

You need to run terraform init when you add a new module, remove a module, modify the source or version of a module, or check out a repository that contains modules. terraform init downloads the modules and caches them in the .terraform/modules directory. It also updates the dependency lock file (.terraform.lock.hcl) if needed, although that file tracks provider versions only, not module versions.

Providers extend Terraform, and modules extend providers.

In Terraform, there are two forms of modularity, the provider (written in Go) and modules (written in HCL). source

Advantages:

- Reusability: write infrastructure code once and use it multiple times across different projects or environments.

- Maintainability: update infrastructure in one place instead of many.

- Encapsulation: hide a lot of resource details and complexity inside a module and expose only a simple interface using variables and outputs.

- Standardization: organizations can enforce best practices and patterns by providing prebuilt modules (eg a secure VPC or a compliant S3 bucket).

- Organization: group related resources together logically (eg all VPC components).

When Should We Write Modules - https://dustindortch.com/2022/10/21/why-do-we-write-terraform-modules/

Terraform Best Practices: Defining Modules - https://dustindortch.com/2024/03/27/terraform-best-pratices-defining-modules

Witnessing many learners of Terraform, there is a pattern where they create their first module and then go crazy writing modules for everything. Creating a module for an Azure Resource Group is one that takes things to an extreme.

Modules for Complete Deployments. These should often be avoided, as well. Such a pattern goes a bit overboard on opinionation. The more opinionated a module is the lower the flexibility is. I have also created and watched others create a module that deploys an entire hub-and-spoke architecture. These sorts of modules do too much. Not only do they make the code less flexible, they also inflate the number of resources managed by the state. While deployment can be impressive, a failure can be equally impressive.

Terraform Module Best Practices: A Complete Guide - https://devopscube.com/terraform-module-best-practices/

https://www.gruntwork.io/blog/how-to-create-reusable-infrastructure-with-terraform-modules

When creating a module, you should always prefer using separate resources. The advantage of using separate resources is that they can be added anywhere, whereas an inline block can only be added within the module that creates a resource. So using solely separate resources makes your module more flexible and configurable.
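
To illustrate the point about separate resources, here is a sketch of a module that defines a security group and one rule as separate resources instead of inline ingress blocks. The names and the vpc_id variable are hypothetical, and aws_vpc_security_group_ingress_rule assumes a reasonably recent AWS provider:

```hcl
variable "vpc_id" {
  type        = string
  description = "VPC where the security group is created"
}

resource "aws_security_group" "web" {
  name   = "web"
  vpc_id = var.vpc_id
  # No inline ingress/egress blocks here
}

# The rule is a separate resource attached to the group by ID
resource "aws_vpc_security_group_ingress_rule" "https" {
  security_group_id = aws_security_group.web.id
  cidr_ipv4         = "0.0.0.0/0"
  from_port         = 443
  to_port           = 443
  ip_protocol       = "tcp"
}

output "security_group_id" {
  value = aws_security_group.web.id
}
```

Because the module exposes security_group_id, its callers can attach their own rules from outside the module, which would not be possible if the rules were inline blocks.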

Google Cloud - Best practices for reusable modules - https://docs.cloud.google.com/docs/terraform/best-practices/reusable-modules

Source

Modules can live (source) locally, in a Git repository or a registry like https://registry.terraform.io/browse/modules.

module "rds_read_replica" {

source = "./modules/rds"

}

Note that we can only specify a version constraint if the module comes from a registry:

# https://github.com/terraform-aws-modules/terraform-aws-eks

module "eks" {

source = "terraform-aws-modules/eks/aws"

version = "~> 21.0"

}

If it comes from Git, we can use the HEAD, or use the ?ref= query param to specify either a tag, a commit SHA or a branch:

module "vpc" {

source = "git::https://example.com/vpc.git" # HEAD

}

module "vpc" {

source = "git::https://example.com/vpc.git?ref=v1.2.0"

}

module "vpc" {

source = "git::https://example.com/vpc.git?ref=51d462976d84fdea54b47d80dcabbf680badcdb8"

}

module "vpc" {

source = "git::https://example.com/vpc.git?ref=someBranch"

}

Using Git sources allows you to consume a module directly from the source repository, without publishing it to a registry. This can be useful if we need to use a version that the maintainer hasn't published to the registry yet. It's also a way to have private modules without using a private registry, since it works with private Git repos (with SSH or HTTPS + tokens).

Module structure

https://developer.hashicorp.com/terraform/language/modules/develop/structure

Usually you have 3 files:

main.tf

variables.tf

outputs.tf

We can also have an example.tfvars file.

Module example

.

├── modules/

│   └── ec2_instance/

│       ├── main.tf

│       ├── variables.tf

│       └── outputs.tf

└── main.tf

Child module:

variable "instance_name" {

type = string

description = "Name of the EC2 instance"

}

variable "instance_type" {

type = string

default = "t2.micro"

description = "EC2 instance type"

}

resource "aws_instance" "this" {

ami = "ami-0c55b159cbfafe1f0" # Amazon Linux (note that AMI IDs are region-specific)

instance_type = var.instance_type

tags = {

Name = var.instance_name

}

}

output "instance_id" {

value = aws_instance.this.id

}

output "public_ip" {

value = aws_instance.this.public_ip

}

Root module (where you run terraform plan etc.):

provider "aws" {

region = "us-east-1"

}

module "web_server" {

source = "./modules/ec2_instance"

instance_name = "web-server"

instance_type = "t2.xlarge"

}

output "web_server_public_ip" {

value = module.web_server.public_ip

}

Define providers in root modules

https://developer.hashicorp.com/terraform/language/modules/develop/providers

From https://docs.cloud.google.com/docs/terraform/best-practices/reusable-modules#providers-backends

Shared modules must not configure providers or backends. Instead, configure providers and backends in root modules.

For shared modules, define the minimum required provider versions in a required_providers block.

Modules which contain their own local provider configuration blocks are considered legacy modules. Legacy modules can't use the count, for_each, or depends_on arguments (source 1, source 2, source 3). You get this error:

The module at module.lb_controller is a legacy module which contains its own local provider configurations, and so calls to it may not use the count, for_each, or depends_on arguments.

If you also control the module "../../modules/lb-controller", consider updating this module to instead expect provider configurations to be passed by its caller.

There are two ways to pass a provider from a root module to a child module:

- Implicit: by not defining any provider block in the child module. The child module automatically inherits the provider configuration from the root module.

- Explicit: by using the providers argument in the module block. This is needed when the root module has multiple instances of a provider (with alias), and we want to specify which one to use in the child module.

  - Setting a providers argument within a module block overrides the default inheritance.

  - Additional provider configurations (those with the alias) are never inherited automatically by child modules, and so must always be passed explicitly using the providers map.

Only provider configurations (eg AWS region) are inherited by child modules, not provider source or version requirements. Each module must declare its own provider requirements using a required_providers block. This is especially important for non-HashiCorp providers. source

Implicit

Root module:

terraform {

required_version = "~> 1.14"

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 6.26.0"

}

}

}

provider "aws" {

region = var.aws_region

default_tags {

tags = var.default_tags

}

}

Child module:

terraform {

required_version = ">= 1.14"

required_providers {

aws = {

source = "hashicorp/aws"

version = ">= 6.26.0"

}

}

}

Note that the root module specifies the maximum version using ~>, whereas the child module declares the minimum provider version it is known to work with using >=. From Best Practices for Provider Versions:

A module intended to be used as the root of a configuration - that is, as the directory where you'd run terraform apply - should also specify the maximum provider version it is intended to work with, to avoid accidental upgrades to incompatible new versions.

Do not use ~> (or other maximum-version constraints) for modules you intend to reuse across many configurations, even if you know the module isn't compatible with certain newer versions. Doing so can sometimes prevent errors, but more often it forces users of the module to update many modules simultaneously when performing routine upgrades. Specify a minimum version, document any known incompatibilities, and let the root module manage the maximum version.

Explicit with a single provider in the child module

terraform {

required_version = "~> 1.14"

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 6.26.0"

}

}

}

# The default configuration is used when no explicit provider instance is set

provider "aws" {

region = "us-east-1"

}

provider "aws" {

alias = "us-west-1"

region = "us-west-1"

}

module "bucket_us_east_1" {

source = "./modules/s3_bucket"

}

module "bucket_us_west_1" {

source = "./modules/s3_bucket"

providers = {

# Set the default (unaliased) provider

# All resources defined in the module will use this provider

aws = aws.us-west-1

}

}

Explicit with multiple providers in the child module

Using an alternate provider configuration in a child module - https://developer.hashicorp.com/terraform/language/block/provider#using-an-alternate-provider-configuration-in-a-child-module

Root module:

terraform {

required_version = "~> 1.14"

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 6.26.0"

}

}

}

provider "aws" {

region = var.aws_region

}

# Provider for ACM certificate (must be in us-east-1 for CloudFront)

provider "aws" {

alias = "us_east_1"

region = "us-east-1"

}

module "web_hosting" {

source = "../../modules/web-hosting"

providers = {

# We only set the aliased provider, the default is inherited implicitly.

# Inside the module, the resources that don't specify a provider explicitly

# will use the default one

aws.us_east_1 = aws.us_east_1

}

}

module "github_actions_oidc" {

source = "../../modules/github-actions-oidc"

# No need to pass the default provider, it is inherited implicitly

}

Child module (modules/web-hosting):

terraform {

required_version = ">= 1.14"

required_providers {

aws = {

source = "hashicorp/aws"

version = ">= 6.26.0"

configuration_aliases = [ aws.us_east_1 ] # <-- important

}

}

}

resource "aws_s3_bucket" "web_hosting" {

# Uses the default provider inherited from the root module

bucket = "${var.app_name}-web-hosting-${local.account_id}-${var.environment}"

}

# ACM certificate must be created in us-east-1 for CloudFront

resource "aws_acm_certificate" "web_hosting" {

# We only set the provider explicitly when we need a different one than the default

provider = aws.us_east_1

domain_name = var.domain_name

}

configuration_aliases is used in a child module to declare that it requires multiple configurations for the same provider. It forces the parent module to pass a specific provider instance for that alias.

We can define multiple provider aliases in configuration_aliases if needed, like in this example from https://developer.hashicorp.com/terraform/language/modules/develop/providers#passing-providers-explicitly:

Root module:

provider "aws" {

alias = "usw1"

region = "us-west-1"

}

provider "aws" {

alias = "usw2"

region = "us-west-2"

}

module "tunnel" {

source = "../../modules/tunnel"

providers = {

aws.src = aws.usw1

aws.dst = aws.usw2

}

}

Child module (modules/tunnel):

terraform {

required_providers {

aws = {

source = "hashicorp/aws"

version = ">= 6.26.0"

configuration_aliases = [ aws.src, aws.dst ]

}

}

}

Publish a module at the registry

The repository needs to be hosted on GitHub. The repository name needs to follow this pattern: terraform-<provider>-<name>, for example terraform-aws-ec2. If the module uses more than one provider, <provider> is the main one.

Module composition

https://developer.hashicorp.com/terraform/language/modules/develop/composition

When we introduce module blocks, our configuration becomes hierarchical rather than flat: each module contains its own set of resources, and possibly its own child modules, which can potentially create a deep, complex tree of resource configurations.

However, in most cases we strongly recommend keeping the module tree flat, with only one level of child modules

Share data between modules

Dependency Inversion - https://developer.hashicorp.com/terraform/language/modules/develop/composition#dependency-inversion
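
A sketch of dependency inversion, modelled on the example in the linked docs. Instead of the cluster module creating or looking up its own network, the root module passes the network details in. The module paths and the input and output names are assumptions:

```hcl
# The network module creates the VPC and subnets and exposes their IDs as outputs
module "network" {
  source = "./modules/aws-network" # hypothetical local module

  base_cidr_block = "10.0.0.0/8"
}

# The cluster module only receives IDs; it doesn't know where they come from
module "consul_cluster" {
  source = "./modules/aws-consul-cluster" # hypothetical local module

  vpc_id     = module.network.vpc_id
  subnet_ids = module.network.subnet_ids
}
```

The module tree stays flat, and the network can later come from another source (for example a data source) without changing the cluster module.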

Data-only modules

https://developer.hashicorp.com/terraform/language/modules/develop/composition#data-only-modules

It may sometimes be useful to write modules that do not describe any new infrastructure at all, but merely retrieve information about existing infrastructure that was created elsewhere using data sources.

A common use of this technique is when a system has been decomposed into several subsystem configurations but there is certain infrastructure that is shared across all of the subsystems, such as a common IP network.
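
A sketch of what a data-only module could look like. It assumes the shared VPC and its private subnets are tagged with Environment and Tier, which is a convention invented for this example:

```hcl
# modules/shared-network/main.tf (hypothetical data-only module)

variable "environment" {
  type        = string
  description = "Environment whose shared network we want to look up"
}

# Look up the existing VPC by tag; nothing is created
data "aws_vpc" "shared" {
  tags = {
    Environment = var.environment
  }
}

# Look up the private subnets of that VPC
data "aws_subnets" "private" {
  filter {
    name   = "vpc-id"
    values = [data.aws_vpc.shared.id]
  }

  tags = {
    Tier = "private"
  }
}

output "vpc_id" {
  value = data.aws_vpc.shared.id
}

output "private_subnet_ids" {
  value = data.aws_subnets.private.ids
}
```

Each subsystem configuration calls this module and reads module.<name>.vpc_id, so the lookup logic lives in a single place.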

terraform_remote_state

https://developer.hashicorp.com/terraform/language/state/remote-state-data

You can use the terraform_remote_state data source to read outputs from another module's state file. This is useful when modules are deployed separately. The other module can be in a different Git repository.

provider "aws" {

region = "us-east-1"

}

data "terraform_remote_state" "networking" {

backend = "s3"

config = {

bucket = "my-terraform-states"

key = "networking/dev/terraform.tfstate"

region = "us-east-1"

}

}

module "payments" {

source = "../../modules/payments"

environment = "dev"

region = "us-east-1"

db_instance_class = "db.t3.micro"

vpc_id = data.terraform_remote_state.networking.outputs.vpc_id

private_subnet_ids = data.terraform_remote_state.networking.outputs.private_subnet_ids

}

Note that you have access to all the state:

Sharing data with root module outputs is convenient, but it has drawbacks. Although terraform_remote_state only exposes output values, its user must have access to the entire state snapshot, which often includes some sensitive information.

Docs recommend publishing the data somewhere else:

When possible, we recommend explicitly publishing data for external consumption to a separate location instead of accessing it via remote state.

A key advantage of using a separate explicit configuration store instead of terraform_remote_state is that the data can potentially also be read by systems other than Terraform...

And also recommend using data sources (like aws_ssm_parameter) and a data-only module:

You can encapsulate the implementation details of retrieving your published configuration data by writing a data-only module containing the necessary data source configuration and any necessary post-processing such as JSON decoding. You can then change that module later if you switch to a different strategy for sharing data between multiple Terraform configurations.
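
A sketch of publishing and reading a value through SSM Parameter Store instead of remote state. The parameter name and the VPC are illustrative. The two halves belong to different Terraform configurations and are shown together only for brevity:

```hcl
# --- Producer configuration (eg the networking project) ---
resource "aws_vpc" "main" {
  cidr_block = "10.0.0.0/16"
}

# Publish the VPC ID under a well-known name
resource "aws_ssm_parameter" "vpc_id" {
  name  = "/networking/dev/vpc_id" # hypothetical naming convention
  type  = "String"
  value = aws_vpc.main.id
}

# --- Consumer configuration (eg the payments project) ---
# It only needs read access to the parameter, not to the producer's state
data "aws_ssm_parameter" "vpc_id" {
  name = "/networking/dev/vpc_id"
}

locals {
  # The provider marks this value as sensitive
  vpc_id = data.aws_ssm_parameter.vpc_id.value
}
```

Wrapping the consumer half in a data-only module hides the parameter naming convention from the rest of the code.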

Version constraints

It's recommended to pin modules to a specific major and minor version, and to set a minimum required version of the Terraform binary, see https://developer.hashicorp.com/terraform/language/style#version-pinning

From https://docs.cloud.google.com/docs/terraform/best-practices/root-modules#minor-provider-versions:

In root modules, declare each provider and pin to a minor version (eg version = "~> 4.0.0"). This allows automatic upgrade to new patch releases while still keeping a solid target. For consistency, name the versions file versions.tf.

https://developer.hashicorp.com/terraform/language/expressions/version-constraints

https://developer.hashicorp.com/terraform/language/providers/requirements#version-constraints

Tutorial - Lock and Upgrade Provider Versions - https://developer.hashicorp.com/terraform/tutorials/configuration-language/provider-versioning

terraform {

required_version = "~> 1.7" # Uses 1.9.5

required_providers {

aws = {

source = "hashicorp/aws"

version = "~> 5.0" # Uses 5.65.0

}

}

}

Examples:

- = 2.0.0 or 2.0.0: exactly 2.0.0. This is the default when there's no operator

- != 2.0.0: exclude exactly 2.0.0. Use it when there's a bug or issue with some specific version

- > 1.2.0: 1.2.1 or 1.3, but not 1.2.0

- >= 1.2.0

- >= 1.2.0, < 2.0.0

- Pessimistic operator: allows only the rightmost version component to increment

  - ~> 1.2.0: allows 1.2.0 and 1.2.1 but not 1.3

  - ~> 1.2: allows 1.2 and 1.3 but not 2.0. Equivalent to >= 1.2.0, < 2.0.0

For modules we can only use version constraints when they are sourced from a registry, but not when they are sourced from a local path or from a Git repository.
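As an illustration of the difference, here is a sketch using the public terraform-aws-modules VPC module. The module and the versions were chosen only as an example. For a Git source the closest equivalent is pinning a tag or commit with the ref query parameter:

```hcl
# Registry source: the version argument accepts a constraint
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.0"
}

# Git source: no version argument; pin an exact tag (or commit) with ?ref=
module "vpc_from_git" {
  source = "git::https://github.com/terraform-aws-modules/terraform-aws-vpc.git?ref=v5.0.0"
}
```

Note that ref selects one exact revision, so there is no automatic upgrade to newer patch releases as with ~>.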

To upgrade provider versions use terraform init -upgrade. It picks the latest version that meets the version constraints set in the code.

If we don't specify a version it uses the latest one.

Variable validation examples

Examples here are general, see AWS-specific examples here.

https://dev.to/drewmullen/terraform-variable-validation-with-samples-1ank

String that allows only specific values:

variable "node_capacity_type" {
  description = "Capacity type for the node group (ON_DEMAND or SPOT)"
  type        = string

  validation {
    condition     = contains(["ON_DEMAND", "SPOT"], var.node_capacity_type)
    error_message = "node_capacity_type must be ON_DEMAND or SPOT"
  }
}

# Using regex
variable "node_capacity_type" {
  description = "Capacity type for the node group (ON_DEMAND or SPOT)"
  type        = string

  validation {
    condition     = can(regex("^(ON_DEMAND|SPOT)$", var.node_capacity_type))
    error_message = "node_capacity_type must be ON_DEMAND or SPOT"
  }
}

List of strings that allows only specific values:

variable "allowed_instance_types" {
  description = "List of allowed instance types (t2.micro, t3.micro, t3a.micro)"
  type        = list(string)

  validation {
    condition = alltrue([
      for t in var.allowed_instance_types : contains(["t2.micro", "t3.micro", "t3a.micro"], t)
    ])
    error_message = "allowed_instance_types can only contain t2.micro, t3.micro or t3a.micro"
  }
}

Array length:

variable "private_eni_subnet_ids" {
  description = "List of private subnet IDs for EKS control plane ENIs"
  type        = list(string)

  validation {
    condition     = length(var.private_eni_subnet_ids) >= 2
    error_message = "At least 2 private subnets are required for EKS control plane ENIs"
  }
}

Number range:

variable "node_group_max_size" {
  description = "Maximum number of nodes in the node group"
  type        = number

  validation {
    condition     = var.node_group_max_size >= 1 && var.node_group_max_size <= 10
    error_message = "node_group_max_size must be between 1 and 10"
  }
}
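In the same style as the examples above, a format check can be built by wrapping a function that fails on bad input in can(). This sketch uses a hypothetical vpc_cidr variable and the built-in cidrhost function to check for a valid CIDR block:

```hcl
variable "vpc_cidr" {
  description = "CIDR block for the VPC, for example 10.0.0.0/16"
  type        = string

  validation {
    # cidrhost fails when the string is not a valid CIDR prefix; can() turns that failure into false
    condition     = can(cidrhost(var.vpc_cidr, 0))
    error_message = "vpc_cidr must be a valid CIDR block, for example 10.0.0.0/16."
  }
}
```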

Templates

- https://github.com/aws-ia/terraform-repo-template

- https://github.com/dustindortch/template-terraform

Multiple environments

How to manage multiple environments with Terraform (Yevgeniy Brikman) - https://www.gruntwork.io/blog/how-to-manage-multiple-environments-with-terraform (Old URL)

Important: HashiCorp doesn't recommend using workspaces for multiple environments, see below.

https://dustindortch.com/2024/03/11/github-actions-release-flow/

There are a number of practices that have been in place within the community, some of which I will proclaim are bad. The practice described in “Terraform Up and Running” whereby repositories hold subdirectories for each deployment...

Terraform accommodates for differences with the use of variables. Instead of hardcoding differences, implement variables that allow for inputs that vary based on the requirements.

| | Workspaces | Branches | Terragrunt |

|---|---|---|---|

| Minimize code duplication | ■■■■■ | □□□□□ | ■■■■□ |

| See and navigate environments | □□□□□ | ■■■□□ | ■■■■■ |

| Different settings in each environment | ■■■■■ | ■■■■□ | ■■■■■ |

| Different backends for each environment | □□□□□ | ■■■■□ | ■■■■■ |

| Different versions in each environment | □□□□□ | ■■□□□ | ■■■■■ |

| Share data between modules | ■■□□□ | ■■□□□ | ■■■■■ |

| Work with multiple modules concurrently | □□□□□ | □□□□□ | ■■■■■ |

| No extra tooling to learn or use | ■■■■■ | ■■■■■ | □□□□□ |

https://docs.cloud.google.com/docs/terraform/best-practices/root-modules#separate-directories

Use separate directories for each application

To manage applications and projects independently of each other, put resources for each application and project in their own Terraform directories. A service might represent a particular application or a common service such as shared networking. Nest all Terraform code for a particular service under one directory (including subdirectories).

https://docs.cloud.google.com/docs/terraform/best-practices/root-modules#subdirectories

When deploying services in Google Cloud, split the Terraform configuration for the service into two top-level directories: a modules directory that contains the actual configuration for the service, and an environments directory that contains the root configurations for each environment.

-- SERVICE-DIRECTORY/
   -- OWNERS
   -- modules/
      -- <service-name>/
         -- main.tf
         -- variables.tf
         -- outputs.tf
         -- provider.tf
         -- README
      -- ...other…
   -- environments/
      -- dev/
         -- backend.tf
         -- main.tf
      -- qa/
         -- backend.tf
         -- main.tf
      -- prod/
         -- backend.tf
         -- main.tf

https://docs.cloud.google.com/docs/terraform/best-practices/root-modules#environment-directories

To share code across environments, reference modules. Typically, this might be a service module that includes the base shared Terraform configuration for the service. In service modules, hard-code common inputs and only require environment-specific inputs as variables.

Each environment directory must contain the following files:

- A backend.tf file, declaring the Terraform backend state location (typically, Cloud Storage)

- A main.tf file that instantiates the service module
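A sketch of what the two files in one environment directory could look like. The bucket name, the module name my-service and its inputs are hypothetical, and the two files are shown together only for brevity:

```hcl
# environments/dev/backend.tf
terraform {
  backend "gcs" {
    bucket = "example-tfstate-dev" # hypothetical bucket, one per environment
    prefix = "my-service"          # hypothetical path prefix inside the bucket
  }
}

# environments/dev/main.tf
module "service" {
  source = "../../modules/my-service" # hypothetical service module

  # Only environment-specific inputs are passed; common values live inside the module
  environment    = "dev"
  instance_count = 1
}
```

The qa and prod directories would repeat the same two files with their own bucket and input values.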

https://docs.cloud.google.com/docs/terraform/best-practices/root-modules#tfvars

For root modules, provide variables by using a .tfvars variables file. For consistency, name variable files terraform.tfvars.

Don't specify variables by using alternative var-files or var='key=val' command-line options. Command-line options are ephemeral and easy to forget. Using a default variables file is more predictable.

Don't do this: terraform plan -var-file="prod.tfvars"
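A minimal sketch of the recommended layout, assuming a hypothetical prod environment directory with two illustrative variables. Terraform loads a file named terraform.tfvars automatically, so a plain terraform plan run inside the directory picks up the values:

```hcl
# environments/prod/variables.tf
variable "project_id" {
  description = "Project that hosts this environment"
  type        = string
}

variable "region" {
  description = "Region to deploy into"
  type        = string
}

# environments/prod/terraform.tfvars (loaded automatically, no -var-file flag needed)
project_id = "example-prod-project" # hypothetical value
region     = "europe-west1"         # hypothetical value
```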

Workspaces

https://developer.hashicorp.com/terraform/language/state/workspaces

Workspaces in the Terraform CLI refer to separate instances of state data inside the same Terraform working directory.

Terraform relies on state to associate resources with real-world objects. When you run the same configuration multiple times with separate state data, Terraform can manage multiple sets of non-overlapping resources.

https://developer.hashicorp.com/terraform/cli/workspaces

Every initialized working directory starts with one workspace named default.

Note that all state is stored in a single backend (eg a single S3 bucket).

terraform workspace new development

terraform workspace new staging

terraform workspace select development

terraform workspace list

terraform workspace delete staging
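To make the single-backend point concrete, here is a sketch assuming an S3 backend; the bucket, key and region are hypothetical. With the default workspace_key_prefix of env:, the state of every non-default workspace is stored in the same bucket under a per-workspace prefix:

```hcl
terraform {
  backend "s3" {
    bucket = "example-terraform-state" # hypothetical bucket name
    key    = "app/terraform.tfstate"   # hypothetical state key
    region = "eu-west-1"               # hypothetical region
  }
}

# Resulting objects in the bucket:
#   app/terraform.tfstate                    <- default workspace
#   env:/development/app/terraform.tfstate   <- development workspace
#   env:/staging/app/terraform.tfstate       <- staging workspace
```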

https://docs.cloud.google.com/docs/terraform/best-practices/root-modules#environment-directories

Use only the default workspace.

Having multiple CLI workspaces within an environment isn't recommended for the following reasons:

- It can be difficult to inspect the configuration in each workspace.

- Having a single shared backend for multiple workspaces isn't recommended because the shared backend becomes a single point of failure if it is used for environment separation.

- While code reuse is possible, code becomes harder to read having to switch based on the current workspace variable (for example, terraform.workspace == "foo" ? this : that).

Don't do this: instance_type = terraform.workspace == "production" ? "t3.large" : "t3.micro"
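One way to avoid the conditional above is to turn the value into an input variable and let each environment supply its own value. This is a sketch with hypothetical variable and resource names:

```hcl
variable "instance_type" {
  description = "EC2 instance type for this environment"
  type        = string
  default     = "t3.micro"
}

variable "ami_id" {
  description = "AMI to launch (hypothetical input, set per environment)"
  type        = string
}

resource "aws_instance" "app" {
  ami           = var.ami_id
  instance_type = var.instance_type # no reference to terraform.workspace
}
```

The production environment would then set instance_type = "t3.large" in its own variables file, and the code contains no branching on the workspace name.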

From HashiCorp When Not to Use Multiple Workspaces:

In particular, organizations commonly want to create a strong separation between multiple deployments of the same infrastructure serving different development stages or different internal teams. In this case, the backend for each deployment often has different credentials and access controls. CLI workspaces within a working directory use the same backend, so they are not a suitable isolation mechanism for this scenario.

https://www.gruntwork.io/blog/how-to-manage-multiple-environments-with-terraform-using-workspaces

all of your state for all of your environments ends up in the same (S3) bucket. This is one of the reasons that even HashiCorp’s own documentation does not recommend using Terraform workspaces for managing environments

VSCode extension

https://marketplace.visualstudio.com/items?itemName=HashiCorp.terraform

In addition to the Terraform extension, there is also the HCL syntax extension, which adds syntax highlighting for HCL files. Installing it is optional, because the Terraform extension already provides Terraform syntax highlighting; the HCL syntax extension "is a grammar only extension targeted to provide HCL syntax highlighting for files not already accounted for by a more specific product-focused extension".

To autoformat on save you need to modify the settings.json file (to open it do Cmd+Shift+P and select 'Preferences: Open User Settings (JSON)') as explained in https://marketplace.visualstudio.com/items?itemName=HashiCorp.terraform#formatting

It has code snippets.

It can handle multiple, separate folders (eg modules) using VSCode Workspaces - see docs.

JetBrains extension

https://plugins.jetbrains.com/plugin/7808-terraform-and-hcl

Docs: https://www.jetbrains.com/help/idea/terraform.html

There are 2 formatters. To use the terraform fmt formatter instead of the JetBrains formatter, go to Preferences → Advanced Settings → Terraform and enable the option 'Terraform fmt'.

You can format a file with Code → Terraform tools → Format file, but it is not very ergonomic. To enable auto-formatting, add a file watcher (see picture in this StackOverflow answer):

- Go to Preferences → Tools → File Watchers

- Click the + symbol (Add)

- At the drop-down list, select 'terraform fmt', which should have the following options pre-filled:

  - Name: terraform fmt

  - Files to Watch:

    - File type: Terraform config files

    - Scope: Project files

  - Tool to Run on Changes:

    - Program: $TerraformExecPath$

    - Arguments: fmt $FilePath$

    - Output paths to refresh: $FilePath$

    - Working directory: leave it empty

    - Environment variables: leave it empty

  - Advanced settings: leave it as it is. Should have only one option checked: Trigger the watcher on external changes

Tools

What are the tools I should be aware of and consider using? - https://www.terraform-best-practices.com/faq#what-are-the-tools-i-should-be-aware-of-and-consider-using